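1. Rules for operations between Tensors

- Any arithmetic operation between Tensors of the same shape is applied element-wise.

- An operation between Tensors of different shapes uses broadcasting. The shapes are compared from the trailing dimension backward, and each pair of dimensions must either be equal or contain a 1; matching on dimension 0 alone is not enough.

- An arithmetic operation between a Tensor and a scalar (a 0-D tensor) broadcasts the scalar value to every element.

- Note: TensorFlow requires all Tensors in a math operation to have the same data type. It does not cast implicitly, so convert with tf.cast first when the types differ.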
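
The broadcasting rule can be illustrated without TensorFlow. The sketch below is a plain-Python model of the shape check only; `broadcast_shape` is a hypothetical helper name, not a TensorFlow function, and it assumes shapes are given as tuples of positive integers.

```python
from itertools import zip_longest


def broadcast_shape(shape_a, shape_b):
    """Return the broadcast result shape, or raise ValueError if incompatible."""
    result = []
    # Walk both shapes from the trailing dimension; a missing dimension counts as 1.
    for a, b in zip_longest(reversed(shape_a), reversed(shape_b), fillvalue=1):
        if a == b or b == 1:
            result.append(a)
        elif a == 1:
            result.append(b)
        else:
            raise ValueError(f"shapes {shape_a} and {shape_b} are not broadcastable")
    return tuple(reversed(result))


print(broadcast_shape((2, 3), (3,)))    # (2, 3)
print(broadcast_shape((2, 1), (1, 4)))  # (2, 4)
print(broadcast_shape((2, 3), ()))      # (2, 3): a scalar broadcasts to any shape
```

Note that shapes (2, 3) and (2,) are rejected even though dimension 0 matches, because the trailing dimensions 3 and 2 disagree.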

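2. Common operators and basic math functions

Most operators are overloaded, so (+ - * /) and similar can be used directly. The drawback is that an operation created through an overloaded operator cannot be given a name.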

tf.add(x, y, name=None)

tf.subtract(x, y, name=None)

tf.multiply(x, y, name=None)

tf.divide(x, y, name=None)

tf.mod(x, y, name=None)

tf.pow(x, y, name=None)

tf.square(x, name=None)

tf.sqrt(x, name=None)

tf.exp(x, name=None)

tf.log(x, name=None)

tf.negative(x, name=None)

tf.sign(x, name=None)

tf.reciprocal(x, name=None)

tf.abs(x, name=None)

tf.round(x, name=None)

tf.ceil(x, name=None)

tf.floor(x, name=None)

tf.rint(x, name=None)

tf.maximum(x, y, name=None)

tf.minimum(x, y, name=None)

tf.cos(x, name=None)

tf.sin(x, name=None)

tf.tan(x, name=None)

tf.acos(x, name=None)

tf.asin(x, name=None)

tf.atan(x, name=None)

tf.div(x, y, name=None)

tf.truediv(x, y, name=None)

tf.floordiv(x, y, name=None)

tf.realdiv(x, y, name=None)

tf.truncatediv(x, y, name=None)

tf.floor_div(x, y, name=None)

tf.truncatemod(x, y, name=None)

tf.floormod(x, y, name=None)

tf.cross(x, y, name=None)

tf.add_n(inputs, name=None)

tf.squared_difference(x, y, name=None)
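
The list contains several division and remainder variants that differ only in how they round. As a rough guide, tf.floordiv and tf.floormod round toward negative infinity (Python semantics), while tf.truncatediv and tf.truncatemod round toward zero (C semantics). The plain-Python sketch below models that difference on scalars; the helper names are hypothetical, and it goes through float division, so it is only meant for small integers.

```python
import math


def floor_div(x, y):
    # Round the quotient toward negative infinity (Python's // behaviour).
    return math.floor(x / y)


def truncate_div(x, y):
    # Round the quotient toward zero (C-style integer division).
    return math.trunc(x / y)


def floor_mod(x, y):
    # Remainder consistent with floor_div: floor_div(x, y) * y + floor_mod(x, y) == x
    return x - floor_div(x, y) * y


def truncate_mod(x, y):
    # Remainder consistent with truncate_div.
    return x - truncate_div(x, y) * y


print(floor_div(-7, 2), truncate_div(-7, 2))  # -4 -3
print(floor_mod(-7, 2), truncate_mod(-7, 2))  # 1 -1
```

The two families agree whenever both operands have the same sign; they differ only when exactly one operand is negative.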

3. Matrix math functions

tf.matmul(a, b, transpose_a=False, transpose_b=False, adjoint_a=False, adjoint_b=False, a_is_sparse=False, b_is_sparse=False, name=None)

tf.transpose(a, perm=None, name='transpose')

tf.matrix_transpose(a, name='matrix_transpose')

tf.trace(x, name=None)

tf.matrix_determinant(input, name=None)

tf.matrix_inverse(input, adjoint=None, name=None)

tf.svd(tensor, full_matrices=False, compute_uv=True, name=None)

tf.qr(input, full_matrices=None, name=None)

tf.norm(tensor, ord='euclidean', axis=None, keep_dims=False, name=None)

tf.eye(num_rows, num_columns=None, batch_shape=None, dtype=tf.float32, name=None)

tf.eye(2)

==> [[1., 0.],

[0., 1.]]

batch_identity = tf.eye(2, batch_shape=[3])

tf.eye(2, num_columns=3)

==> [[ 1., 0., 0.],

[ 0., 1., 0.]]

tf.diag(diagonal, name=None)

# 'diagonal' is [1, 2, 3, 4]

tf.diag(diagonal) ==> [[1, 0, 0, 0]

[0, 2, 0, 0]

[0, 0, 3, 0]

[0, 0, 0, 4]]

tf.diag_part

tf.matrix_diag

tf.matrix_diag_part

tf.matrix_band_part

tf.matrix_set_diag

tf.cholesky

tf.cholesky_solve

tf.matrix_solve

tf.matrix_triangular_solve

tf.matrix_solve_ls

tf.self_adjoint_eig

tf.self_adjoint_eigvals

4. Reduction: reduce various dimensions of a tensor

tf.reduce_sum(input_tensor, axis=None, keep_dims=False, name=None)

# 'x' is [[1, 1, 1], [1, 1, 1]]

tf.reduce_sum(x) ==> 6

tf.reduce_sum(x, 0) ==> [2, 2, 2]

tf.reduce_sum(x, 1) ==> [3, 3]

tf.reduce_sum(x, 1, keep_dims=True) ==> [[3], [3]]

tf.reduce_sum(x, [0, 1]) ==> 6
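
To make the role of `axis` and `keep_dims` concrete, here is a plain-Python sketch of the same reduction on a 2-D nested list. `reduce_sum_2d` is a hypothetical helper, not TensorFlow's implementation, and it only handles `axis` equal to None, 0, or 1 (not a list of axes).

```python
def reduce_sum_2d(x, axis=None, keep_dims=False):
    """Sum a 2-D nested list over all elements, over rows (axis=0), or over columns (axis=1)."""
    rows, cols = len(x), len(x[0])
    if axis is None:
        total = sum(sum(row) for row in x)
        return [[total]] if keep_dims else total
    if axis == 0:
        # Collapse the row dimension: one sum per column.
        out = [sum(x[r][c] for r in range(rows)) for c in range(cols)]
        return [out] if keep_dims else out
    if axis == 1:
        # Collapse the column dimension: one sum per row.
        out = [sum(row) for row in x]
        return [[v] for v in out] if keep_dims else out
    raise ValueError("axis must be None, 0, or 1")


x = [[1, 1, 1], [1, 1, 1]]
print(reduce_sum_2d(x))                     # 6
print(reduce_sum_2d(x, 0))                  # [2, 2, 2]
print(reduce_sum_2d(x, 1))                  # [3, 3]
print(reduce_sum_2d(x, 1, keep_dims=True))  # [[3], [3]]
```

With `keep_dims=True` the reduced dimension is kept with length 1, which keeps the result broadcastable against the original input.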

tf.reduce_mean(input_tensor, axis=None, keep_dims=False, name=None)

tf.reduce_max(input_tensor, axis=None, keep_dims=False, name=None)

tf.reduce_min(input_tensor, axis=None, keep_dims=False, name=None)

tf.reduce_prod(input_tensor, axis=None, keep_dims=False, name=None)

tf.reduce_all(input_tensor, axis=None, keep_dims=False, name=None)

tf.reduce_any(input_tensor, axis=None, keep_dims=False, name=None)

tf.accumulate_n(inputs, shape=None, tensor_dtype=None, name=None)

tf.reduce_logsumexp(input_tensor, axis=None, keep_dims=False, name=None)

tf.count_nonzero(input_tensor, axis=None, keep_dims=False, name=None)

5. Scan: cumulative operations along one axis of a tensor

tf.cumsum(x, axis=0, exclusive=False, reverse=False, name=None)

tf.cumsum([a, b, c]) ==> [a, a + b, a + b + c]

tf.cumsum([a, b, c], exclusive=True) ==> [0, a, a + b]

tf.cumsum([a, b, c], reverse=True) ==> [a + b + c, b + c, c]

tf.cumsum([a, b, c], exclusive=True, reverse=True) ==> [b + c, c, 0]
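
The effect of the `exclusive` and `reverse` flags is easiest to see on concrete numbers. The following is a plain-Python sketch of the 1-D case only; this `cumsum` is a hypothetical stand-in written for illustration, not TensorFlow's implementation.

```python
def cumsum(values, exclusive=False, reverse=False):
    """1-D running total with the same flag semantics as described above."""
    # reverse=True scans from the last element toward the first.
    items = list(reversed(values)) if reverse else list(values)
    out, running = [], 0
    for v in items:
        if exclusive:
            # Exclusive: emit the total of everything before this element.
            out.append(running)
            running += v
        else:
            # Inclusive: the current element is part of its own total.
            running += v
            out.append(running)
    return list(reversed(out)) if reverse else out


print(cumsum([1, 2, 3]))                                # [1, 3, 6]
print(cumsum([1, 2, 3], exclusive=True))                # [0, 1, 3]
print(cumsum([1, 2, 3], reverse=True))                  # [6, 5, 3]
print(cumsum([1, 2, 3], exclusive=True, reverse=True))  # [5, 3, 0]
```

tf.cumprod follows the same pattern with multiplication in place of addition and 1 in place of 0 as the starting value.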

tf.cumprod(x, axis=0, exclusive=False, reverse=False, name=None)

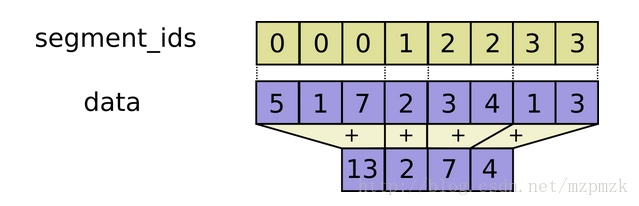

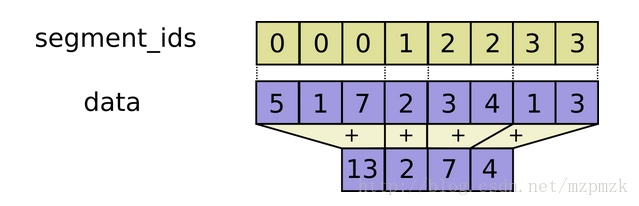

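6. Segmentation

The data is split along the first dimension (axis 0) into groups according to segment_ids (a list), and the operation is then applied to each group.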

tf.segment_sum(data, segment_ids, name=None)

import tensorflow as tf

s = tf.Session()  # TF 1.x session used to evaluate the op

m = tf.constant([5,1,7,2,3,4,1,3])

s_id = [0,0,0,1,2,2,3,3]

s.run(tf.segment_sum(m, segment_ids=s_id))

>array([13, 2, 7, 4], dtype=int32)
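
The grouping behind that result can be sketched in plain Python. `segment_sum` below is a hypothetical 1-D model, not TensorFlow's implementation; it assumes the segment ids are non-negative integers in non-decreasing order, which is what the sorted segment ops expect.

```python
def segment_sum(data, segment_ids):
    """Sum the elements of data that share the same segment id (1-D case)."""
    if len(data) != len(segment_ids):
        raise ValueError("data and segment_ids must have the same length")
    if any(a > b for a, b in zip(segment_ids, segment_ids[1:])):
        raise ValueError("segment_ids must be sorted in non-decreasing order")
    if not segment_ids:
        return []
    # One output slot per segment id from 0 up to the largest id.
    out = [0] * (segment_ids[-1] + 1)
    for value, seg in zip(data, segment_ids):
        out[seg] += value
    return out


m = [5, 1, 7, 2, 3, 4, 1, 3]
s_id = [0, 0, 0, 1, 2, 2, 3, 3]
print(segment_sum(m, s_id))  # [13, 2, 7, 4]
```

Segment 0 collects 5 + 1 + 7, segment 1 holds only 2, segment 2 collects 3 + 4, and segment 3 collects 1 + 3.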

tf.segment_mean(data, segment_ids, name=None)

tf.segment_max(data, segment_ids, name=None)

tf.segment_min(data, segment_ids, name=None)

tf.segment_prod(data, segment_ids, name=None)

tf.unsorted_segment_sum

tf.sparse_segment_sum

tf.sparse_segment_mean

tf.sparse_segment_sqrt_n

7. Sequence comparison and index extraction

tf.setdiff1d(x, y, index_dtype=tf.int32, name=None)

tf.unique(x, out_idx=None, name=None)

tf.where(condition, x=None, y=None, name=None)

tf.argmax(input, axis=None, name=None, output_type=tf.int64)

tf.argmin(input, axis=None, name=None, output_type=tf.int64)

tf.invert_permutation(x, name=None)

tf.edit_distance
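
Several of these functions return index tensors alongside values, which is easy to misread from the signatures alone. The plain-Python sketch below models what tf.unique, tf.setdiff1d, and tf.invert_permutation return for 1-D inputs; the helpers are hypothetical list-based stand-ins, not TensorFlow's implementation.

```python
def unique(x):
    """Return (y, idx): unique values in first-seen order, and each element's index into y."""
    y, idx, position = [], [], {}
    for v in x:
        if v not in position:
            position[v] = len(y)
            y.append(v)
        idx.append(position[v])
    return y, idx


def setdiff1d(x, y):
    """Return (out, idx): values of x that are not in y, and their positions in x."""
    exclude = set(y)
    out = [v for v in x if v not in exclude]
    idx = [i for i, v in enumerate(x) if v not in exclude]
    return out, idx


def invert_permutation(x):
    """Return y such that y[x[i]] == i for every i."""
    y = [0] * len(x)
    for i, v in enumerate(x):
        y[v] = i
    return y


print(unique([1, 1, 2, 4, 4, 4, 7, 8, 8]))        # ([1, 2, 4, 7, 8], [0, 0, 1, 2, 2, 2, 3, 4, 4])
print(setdiff1d([1, 2, 3, 4, 5, 6], [1, 3, 5]))   # ([2, 4, 6], [1, 3, 5])
print(invert_permutation([3, 4, 0, 2, 1]))        # [2, 4, 3, 0, 1]
```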

8. References

http://blog.csdn.net/mzpmzk/article/details/77337851
