Installing Airflow with docker-compose.yaml

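Preface

Airflow consists of many tightly coupled components, especially when it runs with Celery scheduling, and its releases iterate quickly. A native installation on the host is therefore hard to maintain. This guide recommends installing it through docker-compose.yaml instead, so the whole stack can be started and stopped in one step.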

Basic configuration

Create the Airflow home folder

This folder is the root directory on the host that is mapped into Docker. It is later set as $AIRFLOW_HOME.

mkdir /home/airflow;

Create the base folders

cd /home/airflow;

mkdir -p ./dags ./logs ./plugins ./config;

The official documentation describes them as follows:

./dags - you can put your DAG files here.

./logs - contains logs from task execution and scheduler.

./config - you can add custom log parser or add airflow_local_settings.py to configure cluster policy.

./plugins - you can put your custom plugins here.

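- For my own project I also need a src folder and a run.py file here.

Create the .env file

This file is mainly used for permission management inside the Docker environment.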

echo -e "AIRFLOW_UID=$(id -u)" > .env;

- The official documentation explains the .env file as follows:

On Linux, the quick-start needs to know your host user id and needs to have group id set to 0. Otherwise the files created in dags, logs and plugins will be created with root user ownership. You have to make sure to configure them for the docker-compose;

UID of the user to run Airflow containers as. Override if you want to use non-default Airflow UID (for example when you map folders from host, it should be set to result of id -u call. When it is changed, a user with the UID is created with default name inside the container and home of the use is set to /airflow/home/ in order to share Python libraries installed there. This is in order to achieve the OpenShift compatibility. See more in the Arbitrary Docker User

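My reading of the official explanation: it gives the container user ownership of the folders created above, so the containers do not fail with permission errors when they use them.

- The .env file has a second use: you can set official Airflow configuration options in it, for example: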

AIRFLOW_UID=0

AIRFLOW__OPERATORS__DEFAULT_QUEUE=worker

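The AIRFLOW__OPERATORS__DEFAULT_QUEUE option sets the default queue that tasks are assigned to. When Docker starts, the option is loaded into the container's environment variables. Airflow reads its configuration from the environment by default, so .env can be used to set Airflow options.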
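The naming rule behind such variables is AIRFLOW__{SECTION}__{KEY}, with double underscores and upper case. The following is a minimal sketch of that rule, not part of the original setup; the helper names `airflow_env_name` and `render_env_lines` are made up for illustration.

```python
def airflow_env_name(section: str, key: str) -> str:
    """Build the environment variable name Airflow reads for a [section] key."""
    return f"AIRFLOW__{section.upper()}__{key.upper()}"


def render_env_lines(options: dict) -> list:
    """Turn {(section, key): value} into KEY=value lines suitable for a .env file."""
    return [f"{airflow_env_name(s, k)}={v}" for (s, k), v in options.items()]


# Example: the default_queue option from the [operators] section.
lines = render_env_lines({("operators", "default_queue"): "worker"})
print("\n".join(lines))
```

Any option from airflow.cfg can be expressed the same way, which is why a single .env file is enough to override settings for every Airflow service in the compose file.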

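Separating test and production environments

- In real development you need to separate test and production environments and apply different database or system settings to each. You can do this by changing the container's environment variables through .env.

Set the Linux system environment variable

I distinguish production from test by host, so I want the containers to follow the host's global system environment variables. Global variables are set in /etc/environment: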

vim /etc/environment

Add your environment variable, for example:

RUN_ENV=official

Save with :wq, then reload the variables:

source /etc/environment

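Check that the value is the one you set. If it has not changed, reconnect your shell session; a fresh login also exports the variable, which Docker Compose needs in order to see it.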

echo $RUN_ENV

official

Add the system environment variable to .env so it is passed into the Docker containers

Open the .env file and add the following line:

RUN_ENV=${RUN_ENV:-test}

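- This sets the RUN_ENV environment variable inside the containers. Its value is taken from the host's RUN_ENV variable; if that is unset or empty, it falls back to the default value test.

Rebuild the containers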

docker compose build

Restart the containers

docker compose up

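Verify that the variable reached the container

Enter the container first. Business code normally runs in the worker container, so entering the worker is enough: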

docker exec -u airflow -it airflow-airflow-worker-1 bash

echo $RUN_ENV

Finally, read the environment variable in your code to decide which configuration to use.
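A minimal sketch of that last step is shown here. The `CONFIGS` dictionary, its host names, and the `load_config` helper are hypothetical placeholders; only the RUN_ENV variable and its values official and test come from the steps above.

```python
import os

# Hypothetical per-environment settings; replace with your real configuration.
CONFIGS = {
    "official": {"db_host": "prod-db.internal", "debug": False},
    "test": {"db_host": "test-db.internal", "debug": True},
}


def load_config(environ=os.environ):
    """Pick the settings block named by RUN_ENV, defaulting to "test"."""
    run_env = environ.get("RUN_ENV", "test")
    if run_env not in CONFIGS:
        raise ValueError(f"Unknown RUN_ENV: {run_env!r}")
    return run_env, CONFIGS[run_env]


if __name__ == "__main__":
    name, cfg = load_config()
    print(f"Running with {name} config: {cfg}")
```

Failing loudly on an unknown value is a deliberate choice in this sketch: a typo in /etc/environment then stops the task instead of silently running against the wrong database.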

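Configure docker-compose.yaml

Get the docker-compose.yaml file

Mind the version number and match it to the latest Airflow release. For example, the official documentation currently references 2.8.0, but by the time this article was written Airflow 2.8.1 had been released and published on Docker Hub. In that case, change 2.8.0 in the URL to 2.8.1. The command below keeps 2.8.0, so adjust it yourself.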

curl -LfO 'https://airflow.apache.org/docs/apache-airflow/2.8.0/docker-compose.yaml'

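Change the image to match your Python version

The Python version of your project may differ from the one in the Airflow image, which causes many errors, so pick the Airflow image that matches your project's Python version.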

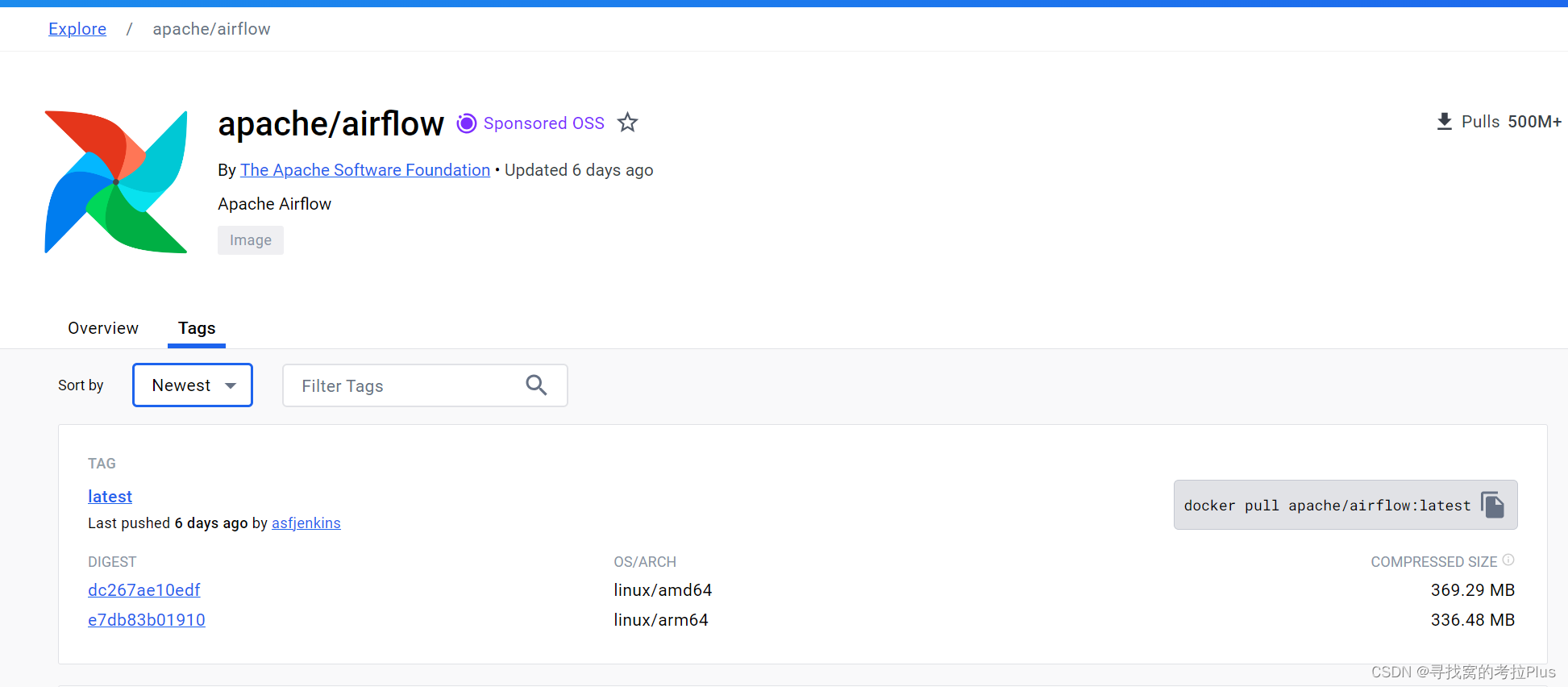

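Note: the default image uses Python 3.8. To use a Python 3.11 image, look up the image name on Docker Hub.

- Docker Hub project page: apache/airflow

- Make sure you use this project. Many other projects are also named airflow, so do not be misled.

- Find the tag you need and take the image name from the pull command: docker pull apache/airflow:2.8.1-python3.11

- I also recommend the apache/airflow:latest-python3.11 image, which always tracks the latest Airflow release on Python 3.11.

Open the yaml file and change the following parameter: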

image: ${AIRFLOW_IMAGE_NAME:-apache/airflow:2.8.1}

Change it to:

image: ${AIRFLOW_IMAGE_NAME:-apache/airflow:latest-python3.11}

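With this change, the Airflow installed from this docker-compose.yaml is the latest release running on Python 3.11.

Use your own database settings

If you are building a distributed deployment yourself, it is better not to use the bundled database services. I created my own PostgreSQL and Redis containers and then changed the following settings: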

AIRFLOW__DATABASE__SQL_ALCHEMY_CONN: postgresql+psycopg2://airflow:airflow@postgres/airflow

AIRFLOW__CORE__SQL_ALCHEMY_CONN: postgresql+psycopg2://airflow:airflow@postgres/airflow

AIRFLOW__CELERY__RESULT_BACKEND: db+postgresql://airflow:airflow@postgres/airflow

AIRFLOW__CELERY__BROKER_URL: redis://:@redis:6379/0

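For a non-distributed setup, skip this step.

Port settings

The port mappings in the original yaml file use several very common ports, so I changed some of them to suit my own setup.

- This depends on your situation. On my server, 5555 and 8080 are already used by other containers, so I changed them. If the machine runs only Airflow and there are no port conflicts, skip this step.

- Web server: the published port 8080 is changed to 8100:

airflow-webserver:
  <<: *airflow-common
  command: webserver
  ports:
    - "8100:8080"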

- Flower (Celery monitoring): the published port 5555 is changed to 8101:

flower:
  <<: *airflow-common
  command: celery flower
  profiles:
    - flower
  ports:
    - "8101:5555"

- Publish a port for the postgres database. This is optional; I use it to inspect Airflow's data. My server sits on an internal network, so it is safe there, but take care in your own setup. Opening this port is generally not recommended.

postgres:
  image: postgres:13
  environment:
    POSTGRES_USER: airflow
    POSTGRES_PASSWORD: airflow
    POSTGRES_DB: airflow
  ports:
    - "5432:5432"
  volumes:
    - postgres-db-volume:/var/lib/postgresql/data
  healthcheck:
    test: ["CMD", "pg_isready", "-U", "airflow"]
    interval: 10s
    retries: 5
    start_period: 5s
  restart: always

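- Publish a port for the redis database. This is also optional and used only to inspect Airflow's data. My server sits on an internal network, so it is safe there, but take care in your own setup. Opening this port is generally not recommended.

redis:
  image: redis:latest
  expose:
    - 6379
  ports:
    - "6380:6379"
  healthcheck:
    test: ["CMD", "redis-cli", "ping"]
    interval: 10s
    timeout: 30s
    retries: 50
    start_period: 30s
  restart: always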
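Before settling on host ports, it can help to check which ones are actually taken. The sketch below is one way to do that from Python and is not part of the original article; `is_port_free` is a hypothetical helper, and the port list simply mirrors the defaults and replacements mentioned above.

```python
import socket


def is_port_free(port, host="0.0.0.0"):
    """Return True if this process can bind host:port, i.e. nothing else holds it."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        try:
            sock.bind((host, port))
        except OSError:
            return False
    return True


if __name__ == "__main__":
    # Default ports from the compose file, followed by the replacements used here.
    for candidate in (8080, 5555, 8100, 8101):
        state = "free" if is_port_free(candidate) else "in use"
        print(f"{candidate}: {state}")
```

Run it on the host, not inside a container, because the conflict that matters is on the host side of each "host:container" mapping.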

Add the permission-management file

The .env file created earlier now has to be referenced in docker-compose.yaml. Add it just above the volumes: entry:

env_file:
  - .env

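Add two mounted volumes

In project development, dags holds your DAG definition files, but the DAGs still need your script code when they run, so a src folder is added to hold that code.

In my own design, the entry point of every script lives in run.py, which dispatches them from one place, so run.py is mounted as well.

volumes:
  - ${AIRFLOW_PROJ_DIR:-.}/dags:/opt/airflow/dags
  - ${AIRFLOW_PROJ_DIR:-.}/logs:/opt/airflow/logs
  - ${AIRFLOW_PROJ_DIR:-.}/config:/opt/airflow/config
  - ${AIRFLOW_PROJ_DIR:-.}/plugins:/opt/airflow/plugins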

After adding the two volumes:

volumes:
  - ${AIRFLOW_PROJ_DIR:-.}/dags:/opt/airflow/dags
  - ${AIRFLOW_PROJ_DIR:-.}/logs:/opt/airflow/logs
  - ${AIRFLOW_PROJ_DIR:-.}/config:/opt/airflow/config
  - ${AIRFLOW_PROJ_DIR:-.}/plugins:/opt/airflow/plugins
  - ${AIRFLOW_PROJ_DIR:-.}/src:/opt/airflow/src
  - ${AIRFLOW_PROJ_DIR:-.}/run.py:/opt/airflow/run.py

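Pay special attention here: run.py must exist on the host before the containers are started. If no file is there to hold the place, Docker creates a directory named run.py for the bind mount when it creates the container, and the mount then does not work as intended.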
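A small pre-flight script can guard against that mistake. This is a sketch under the assumption that the project lives in one directory containing src and run.py; the function name `ensure_mount_sources` is hypothetical.

```python
from pathlib import Path


def ensure_mount_sources(project_dir, files=("run.py",), dirs=("src",)):
    """Create bind-mount sources up front so Docker never has to create them."""
    root = Path(project_dir)
    for name in dirs:
        (root / name).mkdir(parents=True, exist_ok=True)
    for name in files:
        path = root / name
        if path.is_dir():
            # A directory here means Docker already created it on an earlier start.
            raise IsADirectoryError(f"{path} is a directory; remove it and create a file")
        path.touch(exist_ok=True)  # creates an empty placeholder, keeps existing content
    return [root / name for name in (*dirs, *files)]
```

Calling `ensure_mount_sources("/home/airflow")` before the first start leaves an empty run.py in place, which you can then fill with your real entry point.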

Initialize the database

Run the following command to initialize the database first:

docker compose up airflow-init

Output after running it:

[+] Running 43/9

✔ airflow-init 22 layers [⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿] 0B/0B Pulled 116.1s

✔ redis 6 layers [⣿⣿⣿⣿⣿⣿] 0B/0B Pulled 26.3s

✔ postgres 12 layers [⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿] 0B/0B Pulled 32.1s

[+] Running 4/3

✔ Network airflow_default Created 0.2s

✔ Container airflow-postgres-1 Created 0.6s

✔ Container airflow-redis-1 Created 0.6s

✔ Container airflow-airflow-init-1 Created 0.0s

Attaching to airflow-airflow-init-1

airflow-airflow-init-1 | The container is run as root user. For security, consider using a regular user account.

airflow-airflow-init-1 |

airflow-airflow-init-1 | DB: postgresql+psycopg2://airflow:***@postgres/airflow

airflow-airflow-init-1 | Performing upgrade to the metadata database postgresql+psycopg2://airflow:***@postgres/airflow

airflow-airflow-init-1 | [2024-01-25T03:10:12.140+0000] {migration.py:216} INFO - Context impl PostgresqlImpl.

airflow-airflow-init-1 | [2024-01-25T03:10:12.141+0000] {migration.py:219} INFO - Will assume transactional DDL.

airflow-airflow-init-1 | [2024-01-25T03:10:12.143+0000] {migration.py:216} INFO - Context impl PostgresqlImpl.

airflow-airflow-init-1 | [2024-01-25T03:10:12.144+0000] {migration.py:219} INFO - Will assume transactional DDL.

airflow-airflow-init-1 | INFO [alembic.runtime.migration] Context impl PostgresqlImpl.

airflow-airflow-init-1 | INFO [alembic.runtime.migration] Will assume transactional DDL.

airflow-airflow-init-1 | INFO [alembic.runtime.migration] Running stamp_revision -> 88344c1d9134

airflow-airflow-init-1 | Database migrating done!

airflow-airflow-init-1 | /home/airflow/.local/lib/python3.11/site-packages/flask_limiter/extension.py:336 UserWarning: Using the in-memory storage for tracking rate limits as no storage was explicitly specified. This is not recommended for production use. See: https://flask-limiter.readthedocs.io#configuring-a-storage-backend for documentation about configuring the storage backend.

airflow-airflow-init-1 | [2024-01-25T03:10:15.202+0000] {override.py:1369} INFO - Inserted Role: Admin

airflow-airflow-init-1 | [2024-01-25T03:10:15.206+0000] {override.py:1369} INFO - Inserted Role: Public

airflow-airflow-init-1 | [2024-01-25T03:10:15.437+0000] {override.py:868} WARNING - No user yet created, use flask fab command to do it.

airflow-airflow-init-1 | [2024-01-25T03:10:15.474+0000] {override.py:1769} INFO - Created Permission View: can edit on Passwords

airflow-airflow-init-1 | [2024-01-25T03:10:15.479+0000] {override.py:1820} INFO - Added Permission can edit on Passwords to role Admin

airflow-airflow-init-1 | [2024-01-25T03:10:15.484+0000] {override.py:1769} INFO - Created Permission View: can read on Passwords

airflow-airflow-init-1 | [2024-01-25T03:10:15.487+0000] {override.py:1820} INFO - Added Permission can read on Passwords to role Admin

airflow-airflow-init-1 | [2024-01-25T03:10:15.498+0000] {override.py:1769} INFO - Created Permission View: can edit on My Password

airflow-airflow-init-1 | [2024-01-25T03:10:15.502+0000] {override.py:1820} INFO - Added Permission can edit on My Password to role Admin

airflow-airflow-init-1 | [2024-01-25T03:10:15.507+0000] {override.py:1769} INFO - Created Permission View: can read on My Password

airflow-airflow-init-1 | [2024-01-25T03:10:15.510+0000] {override.py:1820} INFO - Added Permission can read on My Password to role Admin

airflow-airflow-init-1 | [2024-01-25T03:10:15.519+0000] {override.py:1769} INFO - Created Permission View: can edit on My Profile

airflow-airflow-init-1 | [2024-01-25T03:10:15.522+0000] {override.py:1820} INFO - Added Permission can edit on My Profile to role Admin

airflow-airflow-init-1 | [2024-01-25T03:10:15.527+0000] {override.py:1769} INFO - Created Permission View: can read on My Profile

airflow-airflow-init-1 | [2024-01-25T03:10:15.531+0000] {override.py:1820} INFO - Added Permission can read on My Profile to role Admin

airflow-airflow-init-1 | [2024-01-25T03:10:15.556+0000] {override.py:1769} INFO - Created Permission View: can create on Users

airflow-airflow-init-1 | [2024-01-25T03:10:15.560+0000] {override.py:1820} INFO - Added Permission can create on Users to role Admin

airflow-airflow-init-1 | [2024-01-25T03:10:15.565+0000] {override.py:1769} INFO - Created Permission View: can read on Users

airflow-airflow-init-1 | [2024-01-25T03:10:15.568+0000] {override.py:1820} INFO - Added Permission can read on Users to role Admin

airflow-airflow-init-1 | [2024-01-25T03:10:15.573+0000] {override.py:1769} INFO - Created Permission View: can edit on Users

airflow-airflow-init-1 | [2024-01-25T03:10:15.576+0000] {override.py:1820} INFO - Added Permission can edit on Users to role Admin

airflow-airflow-init-1 | [2024-01-25T03:10:15.581+0000] {override.py:1769} INFO - Created Permission View: can delete on Users

airflow-airflow-init-1 | [2024-01-25T03:10:15.584+0000] {override.py:1820} INFO - Added Permission can delete on Users to role Admin

airflow-airflow-init-1 | [2024-01-25T03:10:15.591+0000] {override.py:1769} INFO - Created Permission View: menu access on List Users

airflow-airflow-init-1 | [2024-01-25T03:10:15.594+0000] {override.py:1820} INFO - Added Permission menu access on List Users to role Admin

airflow-airflow-init-1 | [2024-01-25T03:10:15.602+0000] {override.py:1769} INFO - Created Permission View: menu access on Security

airflow-airflow-init-1 | [2024-01-25T03:10:15.606+0000] {override.py:1820} INFO - Added Permission menu access on Security to role Admin

airflow-airflow-init-1 | [2024-01-25T03:10:15.624+0000] {override.py:1769} INFO - Created Permission View: can create on Roles

airflow-airflow-init-1 | [2024-01-25T03:10:15.628+0000] {override.py:1820} INFO - Added Permission can create on Roles to role Admin

airflow-airflow-init-1 | [2024-01-25T03:10:15.633+0000] {override.py:1769} INFO - Created Permission View: can read on Roles

airflow-airflow-init-1 | [2024-01-25T03:10:15.636+0000] {override.py:1820} INFO - Added Permission can read on Roles to role Admin

airflow-airflow-init-1 | [2024-01-25T03:10:15.642+0000] {override.py:1769} INFO - Created Permission View: can edit on Roles

airflow-airflow-init-1 | [2024-01-25T03:10:15.645+0000] {override.py:1820} INFO - Added Permission can edit on Roles to role Admin

airflow-airflow-init-1 | [2024-01-25T03:10:15.650+0000] {override.py:1769} INFO - Created Permission View: can delete on Roles

airflow-airflow-init-1 | [2024-01-25T03:10:15.654+0000] {override.py:1820} INFO - Added Permission can delete on Roles to role Admin

airflow-airflow-init-1 | [2024-01-25T03:10:15.663+0000] {override.py:1769} INFO - Created Permission View: menu access on List Roles

airflow-airflow-init-1 | [2024-01-25T03:10:15.667+0000] {override.py:1820} INFO - Added Permission menu access on List Roles to role Admin

airflow-airflow-init-1 | [2024-01-25T03:10:15.683+0000] {override.py:1769} INFO - Created Permission View: can read on User Stats Chart

airflow-airflow-init-1 | [2024-01-25T03:10:15.687+0000] {override.py:1820} INFO - Added Permission can read on User Stats Chart to role Admin

airflow-airflow-init-1 | [2024-01-25T03:10:15.696+0000] {override.py:1769} INFO - Created Permission View: menu access on User's Statistics

airflow-airflow-init-1 | [2024-01-25T03:10:15.700+0000] {override.py:1820} INFO - Added Permission menu access on User's Statistics to role Admin

airflow-airflow-init-1 | [2024-01-25T03:10:15.722+0000] {override.py:1769} INFO - Created Permission View: can read on Permissions

airflow-airflow-init-1 | [2024-01-25T03:10:15.726+0000] {override.py:1820} INFO - Added Permission can read on Permissions to role Admin

airflow-airflow-init-1 | [2024-01-25T03:10:15.735+0000] {override.py:1769} INFO - Created Permission View: menu access on Actions

airflow-airflow-init-1 | [2024-01-25T03:10:15.738+0000] {override.py:1820} INFO - Added Permission menu access on Actions to role Admin

airflow-airflow-init-1 | [2024-01-25T03:10:15.758+0000] {override.py:1769} INFO - Created Permission View: can read on View Menus

airflow-airflow-init-1 | [2024-01-25T03:10:15.762+0000] {override.py:1820} INFO - Added Permission can read on View Menus to role Admin

airflow-airflow-init-1 | [2024-01-25T03:10:15.772+0000] {override.py:1769} INFO - Created Permission View: menu access on Resources

airflow-airflow-init-1 | [2024-01-25T03:10:15.777+0000] {override.py:1820} INFO - Added Permission menu access on Resources to role Admin

airflow-airflow-init-1 | [2024-01-25T03:10:15.801+0000] {override.py:1769} INFO - Created Permission View: can read on Permission Views

airflow-airflow-init-1 | [2024-01-25T03:10:15.805+0000] {override.py:1820} INFO - Added Permission can read on Permission Views to role Admin

airflow-airflow-init-1 | [2024-01-25T03:10:15.813+0000] {override.py:1769} INFO - Created Permission View: menu access on Permission Pairs

airflow-airflow-init-1 | [2024-01-25T03:10:15.817+0000] {override.py:1820} INFO - Added Permission menu access on Permission Pairs to role Admin

airflow-airflow-init-1 | [2024-01-25T03:10:16.637+0000] {override.py:1458} INFO - Added user airflow

airflow-airflow-init-1 | User "airflow" created with role "Admin"

airflow-airflow-init-1 | 2.8.1

- By default the postgres user name and password are both airflow, and redis has an empty password. If Airflow is exposed to the public internet, remember to change these passwords.

When it finishes, docker images shows 3 new images:

[root@localhost ~]# docker images
REPOSITORY       TAG                 IMAGE ID       CREATED       SIZE
apache/airflow   latest-python3.11   6982e639a403   5 days ago    1.34GB
postgres         13                  0896a8e0282d   2 years ago   371MB
redis            latest              7614ae9453d1   2 years ago   113MB

docker ps then shows 2 new containers:

[root@localhost ~]# docker ps
CONTAINER ID   IMAGE          COMMAND                  CREATED         STATUS                   PORTS      NAMES
596112365a38   redis:latest   "docker-entrypoint.s…"   7 minutes ago   Up 7 minutes (healthy)   6379/tcp   airflow-redis-1
afa0e8f05ac4   postgres:13    "docker-entrypoint.s…"   7 minutes ago   Up 7 minutes (healthy)   5432/tcp   airflow-postgres-1

The database environment is now ready.

Start Airflow

Start in the foreground

Useful for checking that Airflow runs correctly:

docker compose up

Start in the background

docker compose up -d

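Start together with Flower

docker compose --profile flower up -d

Start a single service

docker compose up airflow-worker

Common Docker commands for Airflow

Stop

docker compose down

Restart

docker compose restart

Enter the container as root (the container's default user here, since AIRFLOW_UID is 0 in this setup)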

docker exec -it airflow-airflow-worker-1 /bin/bash

Enter the container as the airflow user

docker exec -it -u airflow airflow-airflow-worker-1 /bin/bash

Access the Airflow web UI

If you changed the port mapping, use your mapped port. The default is 8080:

http://127.0.0.1:8080

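You are asked to log in. The default user name and password are both airflow.

Change the password

- Click the icon in the upper-right corner, then Your Profile

- Reset my password

Installing Python packages for your business code

The quick and dirty way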

- Tasks run in the airflow-airflow-worker-1 container, so you can enter it and run pip install directly. You must enter as the airflow user to install packages.

docker exec -it -u airflow airflow-airflow-worker-1 /bin/bash

- Important: if you enter as root and run pip, it refuses and tells you to use the airflow user:

root@2b4151bb054d:/opt/airflow# pip list

You are running pip as root. Please use 'airflow' user to run pip!

See: https://airflow.apache.org/docs/docker-stack/build.html#adding-a-new-pypi-package

- When installing requirements.txt inside the container with -i pointing at an http mirror, you also have to trust that host with --trusted-host mirrors.aliyun.com, as follows:

pip install -r requirements.txt -i http://mirrors.aliyun.com/pypi/simple/ --trusted-host mirrors.aliyun.com

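The drawback of this approach is that the installed packages are lost whenever the containers are recreated, for example after docker compose build or an Airflow version upgrade. The following method is therefore recommended instead.

The elegant way

- Put the requirements.txt you need into the airflow directory

- Create a Dockerfile with the following content, changing FROM to your image name: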

FROM apache/airflow:latest-python3.11

USER airflow

COPY requirements.txt /

RUN pip install -r /requirements.txt -i https://pypi.tuna.tsinghua.edu.cn/simple --trusted-host pypi.tuna.tsinghua.edu.cn

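- Then, in the yaml file, comment out the image: line (image: ${AIRFLOW_IMAGE_NAME:-apache/airflow:latest-python3.11} after the change made earlier), uncomment build: ., and save.

- At this point the airflow directory should contain dags, logs, plugins, config, src, run.py, .env, docker-compose.yaml, Dockerfile and requirements.txt. Compare it with yours before continuing.

- Then run the following command to rebuild the image: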

docker compose build

- Verify the installation: enter the worker container and check with pip list

Container status after installation

Check with docker ps:

[root@localhost airflow]# docker ps
CONTAINER ID   IMAGE                              COMMAND                  CREATED          STATUS                     PORTS                                                 NAMES
853c717d4fdb   redis:latest                       "docker-entrypoint.s…"   5 minutes ago    Up 5 minutes (healthy)     0.0.0.0:6380->6379/tcp, :::6380->6379/tcp             airflow-redis-1
33d6899c0aa8   postgres:13                        "docker-entrypoint.s…"   5 minutes ago    Up 5 minutes (healthy)     0.0.0.0:5432->5432/tcp, :::5432->5432/tcp             airflow-postgres-1
bb26e290270a   airflow-airflow-webserver          "/usr/bin/dumb-init …"   5 minutes ago    Up 5 minutes (healthy)     0.0.0.0:8100->8080/tcp, :::8100->8080/tcp             airflow-airflow-webserver-1
a6fc286cd79b   airflow-airflow-triggerer          "/usr/bin/dumb-init …"   5 minutes ago    Up 5 minutes (unhealthy)   8080/tcp                                              airflow-airflow-triggerer-1
6b60e663f16d   airflow-airflow-scheduler          "/usr/bin/dumb-init …"   5 minutes ago    Up 5 minutes (healthy)     8080/tcp                                              airflow-airflow-scheduler-1
e97d9eca40dd   airflow-airflow-worker             "/usr/bin/dumb-init …"   5 minutes ago    Up 5 minutes (unhealthy)   8080/tcp                                              airflow-airflow-worker-1
8dac2db6105b   apache/airflow:latest-python3.11   "/usr/bin/dumb-init …"   52 minutes ago   Up 52 minutes (healthy)    8080/tcp, 0.0.0.0:8101->5555/tcp, :::8101->5555/tcp   airflow-flower-1

This is what a complete Airflow stack looks like when everything is running. Flower has to be started separately.

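Tips and tricks

No errors are reported, but your DAG does not show up

If nothing reports an error anywhere but the DAG still does not appear, check the Docker logs, especially the scheduler's: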

docker logs airflow-airflow-scheduler-1

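How to load DAGs immediately

A change to the file triggers a reload right away, for example saving the file again.

How to force-stop a task that a DAG is running

Set its state to failed.

Characteristics of PythonVirtualenvOperator

- PythonVirtualenvOperator creates a separate virtual environment and runs a copy of your script from there, rather than executing your code in place: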

[2023-12-13, 07:50:43 UTC] {process_utils.py:182} INFO - Executing cmd: /tmp/venv3gux3z4y/bin/python /tmp/venv3gux3z4y/script.py /tmp/venv3gux3z4y/script.in /tmp/venv3gux3z4y/script.out /tmp/venv3gux3z4y/string_args.txt /tmp/venv3gux3z4y/termination.log

[2023-12-13, 07:50:43 UTC] {process_utils.py:186} INFO - Output:

[2023-12-13, 07:50:44 UTC] {process_utils.py:190} INFO - Traceback (most recent call last):

[2023-12-13, 07:50:44 UTC] {process_utils.py:190} INFO - File "/tmp/venv3gux3z4y/script.py", line 30, in <module>

[2023-12-13, 07:50:44 UTC] {process_utils.py:190} INFO - res = import_package(*arg_dict["args"], **arg_dict["kwargs"])

[2023-12-13, 07:50:44 UTC] {process_utils.py:190} INFO - ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

[2023-12-13, 07:50:44 UTC] {process_utils.py:190} INFO - File "/tmp/venv3gux3z4y/script.py", line 26, in import_package

[2023-12-13, 07:50:44 UTC] {process_utils.py:190} INFO - from src.clean.clean_product.AsinDetail2Recent30Day import AsinDetail2Recent30Day

[2023-12-13, 07:50:44 UTC] {process_utils.py:190} INFO - ModuleNotFoundError: No module named 'src'

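The line File "/tmp/venv3gux3z4y/script.py", line 30, in <module> shows clearly that it creates a randomly named folder, venv3gux3z4y, under /tmp and runs your script from there. The src package is not on the module search path of that copy, which is why the import fails.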
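One possible workaround, which is an assumption on my part and not something the original article tested, is to extend sys.path inside the callable before importing project code. The function name `run_clean_job` is hypothetical; the /opt/airflow path comes from the volume mappings configured earlier.

```python
def run_clean_job(project_root="/opt/airflow"):
    """Hypothetical callable to pass to PythonVirtualenvOperator."""
    # Everything the callable needs is imported inside it, because only the
    # function's own source is copied into the generated script.py.
    import sys

    # Make the mounted project visible to the interpreter in the virtualenv.
    if project_root not in sys.path:
        sys.path.insert(0, project_root)

    # Project imports can now be attempted here, for example:
    # from src.clean.clean_product.AsinDetail2Recent30Day import AsinDetail2Recent30Day
    return project_root in sys.path
```

The default argument is a string literal on purpose: a module-level constant would not exist in the copied script. Third-party packages that src depends on still have to be available inside the virtual environment.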

Sending print output to the Airflow log

In your code, add sys.stdout as a sink of your logger:

logger.add(sink=sys.stdout)

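Python interpreter differences between BashOperator and PythonOperator

- BashOperator uses the Python interpreter of the airflow user on the worker.

- PythonOperator uses the Python interpreter of the virtual environment.

Cleaning up

To remove the Airflow installation completely, the official documentation recommends: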

- The docker-compose environment we have prepared is a “quick-start” one. It was not designed to be used in production and it has a number of caveats - one of them being that the best way to recover from any problem is to clean it up and restart from scratch.

The best way to do this is to:

- Run docker compose down --volumes --remove-orphans command in the directory you downloaded the docker-compose.yaml file

- Remove the entire directory where you downloaded the docker-compose.yaml file rm -rf '<DIRECTORY>'

- Run through this guide from the very beginning, starting by re-downloading the docker-compose.yaml file

Let's learn from each other.
