[Study Notes] Preprocessing and displaying the YelpReviewFull dataset with Python transformers

transformers

huggingface/transformers: a Python library for natural language processing that provides pretrained Transformer models together with their tokenizers. It is suited to developing and running NLP tasks such as text classification.

Project URL: https://gitcode.com/gh_mirrors/tra/transformers

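1. Dataset overview

Yelp is a multinational company headquartered in San Francisco, USA, that develops the Yelp.com website and the Yelp mobile app. Yelp is a site where users review restaurants and other businesses. (Wikipedia)

The Yelp reviews dataset consists of reviews from Yelp. It is extracted from the Yelp Dataset Challenge 2015 data.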


Supported tasks:

text-classification, sentiment-classification

The dataset is mainly used for text classification: given a review text, predict its sentiment.

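Data structure:

Each record consists of a text and its corresponding label:

- 'text': the review text, escaped using double quotes ("); any internal double quote is escaped as two double quotes (""). Newlines are escaped as a backslash followed by an "n" character, that is, "\n".

- 'label': the review score (0-4), where 0 means 1 star and 4 means 5 stars.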

    {'label': 0,
     'text': "This place is absolute garbage... Half of the tees are not available, including all the grass tees. It is cash only, and they sell the last bucket at 8, despite having lights. And if you finish even a minute after 8, don't plan on getting a drink. The vending machines are sold out (of course) and they sell drinks inside, but close the drawers at 8 on the dot. There are weeds grown all over the place. I noticed some sort of batting cage, but it looks like those are out of order as well. Someone should buy this place and turn it into what it should be."}
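The escaping rules and label encoding described above can be illustrated with a small sketch. The helpers `unescape_review` and `label_to_stars` are hypothetical names introduced here for illustration; they are not part of `datasets`, and the sketch assumes the text follows exactly the escaping described above.

```python
def unescape_review(text: str) -> str:
    """Undo the escaping described above (hypothetical helper)."""
    # Two consecutive double quotes stand for one literal double quote.
    text = text.replace('""', '"')
    # A backslash followed by "n" stands for a real newline.
    return text.replace("\\n", "\n")


def label_to_stars(label: int) -> int:
    """Map a 0-4 label to its 1-5 star rating."""
    if not 0 <= label <= 4:
        raise ValueError(f"label must be in 0..4, got {label}")
    return label + 1


# A made-up review string, used only to exercise the helper.
raw = 'One word summary: ""abysmal"".\\n\\nWow.'
print(unescape_review(raw))
print(label_to_stars(0), label_to_stars(4))
```

Keeping the conversion in small functions makes it easy to apply only when readable text is needed; the tokenizer can work on the raw strings as they are.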

2. Displaying the data

load_dataset.py:

    from datasets import load_dataset

    dataset = load_dataset("yelp_review_full")
    print(dataset)
    print(type(dataset))
    print(dataset["train"][11])

Output:

    DatasetDict({
        train: Dataset({
            features: ['label', 'text'],
            num_rows: 650000
        })
        test: Dataset({
            features: ['label', 'text'],
            num_rows: 50000
        })
    })
    <class 'datasets.dataset_dict.DatasetDict'>
    {'label': 0, 'text': "This place is absolute garbage... Half of the tees are not available, including all the grass tees. It is cash only, and they sell the last bucket at 8, despite having lights. And if you finish even a minute after 8, don't plan on getting a drink. The vending machines are sold out (of course) and they sell drinks inside, but close the drawers at 8 on the dot. There are weeds grown all over the place. I noticed some sort of batting cage, but it looks like those are out of order as well. Someone should buy this place and turn it into what it should be."}

Displaying random samples:

    import random

    import datasets
    import pandas as pd
    from IPython.display import display, HTML

    def show_random_elements(dataset, num_examples=10):
        assert num_examples <= len(dataset), "num_examples cannot exceed the length of the dataset."
        # Draw distinct random row indices.
        picks = []
        for _ in range(num_examples):
            pick = random.randint(0, len(dataset) - 1)
            while pick in picks:
                pick = random.randint(0, len(dataset) - 1)
            picks.append(pick)

        df = pd.DataFrame(dataset[picks])
        # Replace integer class ids with their readable names (e.g. "1 star").
        for column, typ in dataset.features.items():
            if isinstance(typ, datasets.ClassLabel):
                df[column] = df[column].transform(lambda i: typ.names[i])
        # display(HTML(df.to_html()))  # renders an HTML table in a notebook
        print(df)

    show_random_elements(dataset["train"], 12)

Output:

          label                                               text
    0   4 stars  Inside the Planet hollywood, walked over from ...
    1    1 star  I would rate it zero because the manager is a ...
    2   4 stars  Great beats that cuts thru your soul. A bit ...
    3    1 star  When making an \"appointment\" you are not tol...
    4    2 star  Tacos El Gordo is a bit of a zoo, even at 2 am...
    5   3 stars  I like McAlisters. They are always a good choi...
    6   3 stars  First thing that attracted my attention when I...
    7   3 stars  The food was ok. I ordered mozzarella sticks a...
    8   3 stars  Great place to go after the pool when you need...
    9    2 star  Thought I'd give this new place a try located ...
    10  3 stars  Rooms are nice, but no bottle waters?! They sh...
    11   1 star  One word summary: abysmal. \n\nWow, where do I...
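The retry loop in show_random_elements exists only to draw distinct row indices. As a possible alternative, the standard library's `random.sample` draws without replacement in a single call. The helper name `pick_unique_indices` and its `seed` parameter are assumptions introduced here; they do not appear in the original script.

```python
import random


def pick_unique_indices(n_rows, num_examples, seed=None):
    """Return num_examples distinct indices in [0, n_rows) (hypothetical helper)."""
    if num_examples > n_rows:
        raise ValueError("num_examples cannot exceed the length of the dataset.")
    # A private Random instance keeps the global random state untouched.
    rng = random.Random(seed)
    # sample() draws without replacement, so no duplicate check is needed.
    return rng.sample(range(n_rows), num_examples)


picks = pick_unique_indices(650000, 12, seed=0)
print(picks)
```

The returned list could then be used in place of `picks`, as in `pd.DataFrame(dataset[picks])`.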

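3. Preprocessing the data

With the dataset available locally, a Tokenizer is used to process the text. Because the inputs vary in length, padding and truncation strategies are applied to bring them to a uniform length.

The map method of Datasets applies a preprocessing function to the entire dataset in one pass.

The following pads every sample to the maximum length and processes the whole dataset: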

    from transformers import AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained("bert-base-cased")

    def tokenize_function(examples):
        return tokenizer(examples["text"], padding="max_length", truncation=True)

    tokenized_datasets = dataset.map(tokenize_function, batched=True)

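padding="max_length" pads every sample to the maximum length. Because no max_length argument is passed, this is the maximum input length the model accepts (512 tokens for bert-base-cased).

truncation=True truncates samples that exceed the maximum length.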
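To make the two options concrete, here is a minimal pure-Python sketch of what padding and truncation do to one list of token ids. It is not the tokenizer's implementation: the function name `pad_and_truncate` is hypothetical, `pad_id=0` is an assumption, and a real tokenizer also keeps special tokens such as [CLS] and [SEP] in place when it truncates.

```python
def pad_and_truncate(token_ids, max_length, pad_id=0):
    """Cut or pad one sequence to exactly max_length (illustrative sketch)."""
    # Truncation: drop everything beyond max_length.
    ids = list(token_ids[:max_length])
    # Real tokens get attention 1, padding positions get attention 0.
    mask = [1] * len(ids)
    n_pad = max_length - len(ids)
    return {
        "input_ids": ids + [pad_id] * n_pad,
        "attention_mask": mask + [0] * n_pad,
    }


short = pad_and_truncate([101, 7, 8, 102], max_length=6)
print(short)  # {'input_ids': [101, 7, 8, 102, 0, 0], 'attention_mask': [1, 1, 1, 1, 0, 0]}

long = pad_and_truncate([1, 2, 3, 4, 5], max_length=3)
print(long)   # {'input_ids': [1, 2, 3], 'attention_mask': [1, 1, 1]}
```

The attention_mask is how the model later tells real tokens from padding, which is why the tokenized dataset gains that column.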

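Batch processing with map: dataset.map(tokenize_function, batched=True) applies tokenize_function to the whole dataset. With batched=True the function receives a batch of examples on each call instead of a single example, which makes tokenization considerably faster.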
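The effect of batched=True can be sketched in plain Python. This is only an illustration of the calling convention, not how `datasets` implements map: the name `batched_map` is hypothetical, the data is a plain dict of column lists, and the default `batch_size=1000` mirrors the library's documented default.

```python
def batched_map(columns, function, batch_size=1000):
    """Apply function to slices of a column dict and merge the results (sketch)."""
    n_rows = len(next(iter(columns.values())))
    out = {name: [] for name in columns}
    for start in range(0, n_rows, batch_size):
        # Each call sees a dict of lists, e.g. {"text": ["...", "..."]}.
        batch = {name: values[start:start + batch_size]
                 for name, values in columns.items()}
        result = function(batch)
        # Returned columns are added to (or overwrite) the existing ones.
        merged = {**batch, **result}
        for name, values in merged.items():
            out.setdefault(name, []).extend(values)
    return out


def count_chars(batch):
    # Stand-in for tokenize_function: one output value per input text.
    return {"n_chars": [len(text) for text in batch["text"]]}


result = batched_map({"text": ["ab", "cde", "f"]}, count_chars, batch_size=2)
print(result)  # {'text': ['ab', 'cde', 'f'], 'n_chars': [2, 3, 1]}
```

This is why tokenize_function indexes `examples["text"]` and passes the whole list to the tokenizer: under batched=True that value is a list of strings, not a single string.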

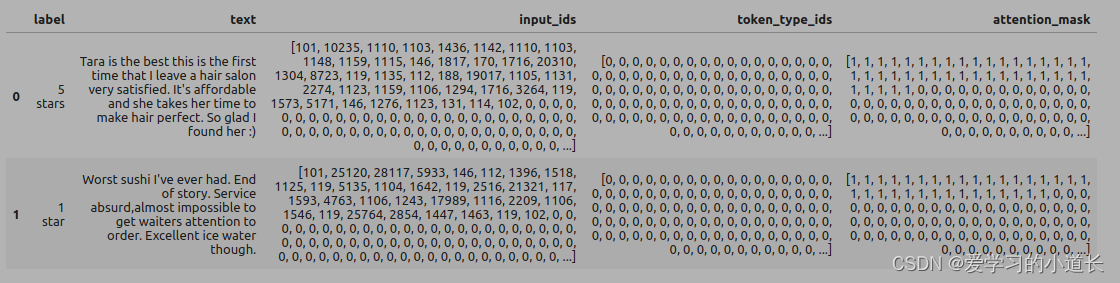

Output:

    Map: 100%|██████████| 650000/650000 [01:25<00:00, 7601.71 examples/s]
    Map: 100%|██████████| 50000/50000 [00:06<00:00, 7505.79 examples/s]
        label  ...                                     attention_mask
    0  1 star  ...  [1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, ...
    1  1 star  ...  [1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, ...

    [2 rows x 5 columns]

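To show the table in a web page such as a notebook, use the commented-out display(HTML(df.to_html())) call in show_random_elements instead of print(df).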

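4. Sampling the data

seed=36 is a random seed that makes the shuffle reproducible. After shuffling, select(range(100)) keeps the first 100 rows of each split: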

train_dataset = tokenized_datasets["train"].shuffle(seed=36).select(range(100))

eval_dataset = tokenized_datasets["test"].shuffle(seed=36).select(range(100))
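The shuffle-then-select pattern can be pictured with a plain-Python analogy. The sketch below uses the standard library's `random.Random` rather than the generator that `datasets` uses internally, so the concrete indices will differ from the library's; the helper name `sample_indices` is hypothetical.

```python
import random


def sample_indices(n_rows, n_samples, seed):
    """Shuffle row indices with a fixed seed and keep the first n_samples (sketch)."""
    indices = list(range(n_rows))
    # The same seed always produces the same permutation.
    random.Random(seed).shuffle(indices)
    return indices[:n_samples]


first = sample_indices(50000, 100, seed=36)
second = sample_indices(50000, 100, seed=36)
print(first == second)  # True
print(len(first))       # 100
```

Fixing the seed means the same 100 rows are chosen on every run, which keeps experiments on the small subset comparable.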
