Examples of using the cv2.split() and cv2.merge() functions in OpenCV


Source of the learning material: python3+opencv, image channel separation (the split() function) and merging (the merge() function)

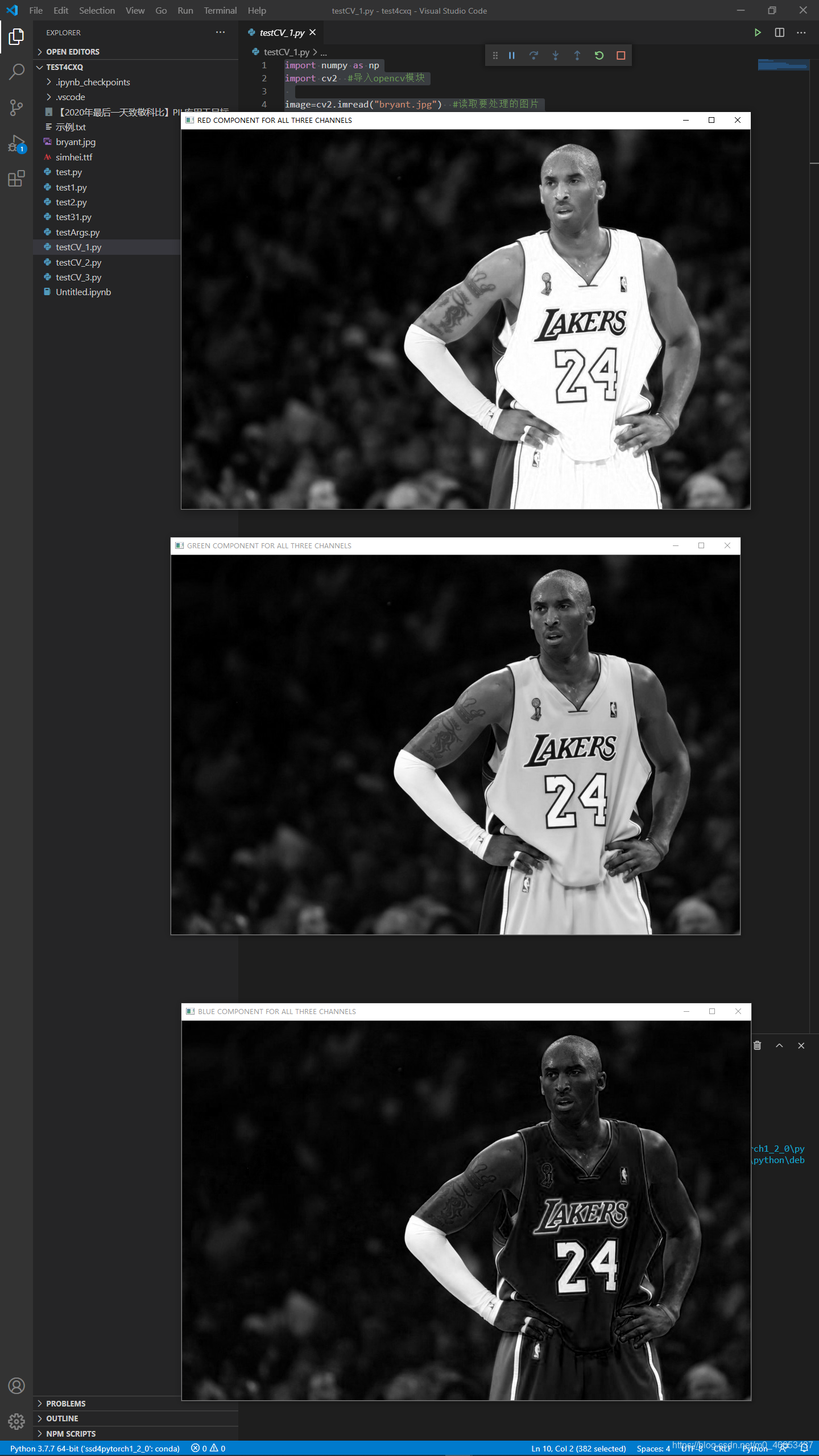

Split the image into its three channels. Note that the channel order is BGR:

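import numpy as np

import cv2  # import the OpenCV module

image = cv2.imread("bryant.jpg")  # read the image to process

B, G, R = cv2.split(image)  # split the image into its B, G and R color channels

cv2.imshow("RED COMPONENT FOR ALL THREE CHANNELS", R)  # show the R channel as a grayscale image (as if all three channels held the R value)

cv2.imshow("GREEN COMPONENT FOR ALL THREE CHANNELS", G)  # show the G channel as a grayscale image (as if all three channels held the G value)

cv2.imshow("BLUE COMPONENT FOR ALL THREE CHANNELS", B)  # show the B channel as a grayscale image (as if all three channels held the B value)

cv2.waitKey(0)  # wait for a key press so the windows do not close immediately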

Screenshot of the result:

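Split the image into its three channels and set the other two channels to zero, so that each channel is shown in its own color. Note that the channel order is BGR: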

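import numpy as np

import cv2  # import the OpenCV module

image = cv2.imread("bryant.jpg")  # read the image to process

B, G, R = cv2.split(image)  # split the image into its B, G and R color channels

zeros = np.zeros(image.shape[:2], dtype="uint8")  # create a zero matrix with the same height and width as image

cv2.imshow("DISPLAY BLUE COMPONENT", cv2.merge([B, zeros, zeros]))  # show the (B, 0, 0) image

cv2.imshow("DISPLAY GREEN COMPONENT", cv2.merge([zeros, G, zeros]))  # show the (0, G, 0) image

cv2.imshow("DISPLAY RED COMPONENT", cv2.merge([zeros, zeros, R]))  # show the (0, 0, R) image

cv2.waitKey(0)  # wait for a key press so the windows do not close immediately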

Screenshot of the result:

Merge the three extracted channels back together:

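import numpy as np

import cv2  # import the OpenCV module

image = cv2.imread("bryant.jpg")  # read the image to process

B, G, R = cv2.split(image)  # split the image into its B, G and R color channels

cv2.imshow("MERGE RED,GREEN AND BLUE CHANNELS", cv2.merge([B, G, R]))  # show the (B, G, R) image

cv2.waitKey(0)  # wait for a key press so the window does not close immediately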

Screenshot of the result:
