Building an Operator from Scratch (A Step-by-Step Tutorial)


Project repository: https://github.com/kosmos-io/simple-controller. If you find it useful, please give it a star. Thanks!

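What is an Operator

When the native Kubernetes resources cannot meet our business needs, we can use an Operator: we define our own CRD and write a matching Controller. The CRD describes the resources the business needs, and the Controller implements the logic behind them. For example, to deploy Nginx on Kubernetes and make it reachable from outside, we first create a Deployment to run Nginx and then a Service that maps the container ports of the Deployment's Pods to a port on the host. Doing this by hand every time is tedious; we would rather create a single resource that covers both the Deployment and the Service.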

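How to develop an Operator

To make this kind of development easier, the community provides controller-runtime, a framework for building controllers on top of CRDs. With it we can watch our own CRD and run the corresponding handling logic. The community also provides scaffolding tools, mainly kubebuilder and operator-sdk. The two are largely alike: both implement the Controller logic with the controller-runtime project maintained under a Kubernetes SIG (kubernetes-sigs), and they differ mainly in how the CRD resources are created. To understand controller-runtime better, this article writes the controller directly against controller-runtime.

In this article we develop a complete Operator from scratch.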

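CRD requirements analysis

- Background: to deploy a simple web server to a Kubernetes cluster, we first write a Deployment YAML file that pulls the web server image and starts it, and then expose it through a Service. Every time, we have to create two kinds of resources ourselves before the service is really up and reachable. Isn't that too much trouble?

- Requirement: define a custom resource through a CRD that describes the application to deploy, including the image, container port, replica count, and service port. When an object of this kind is created, the associated Deployment and Service are generated along with it.

- Solution:

  - Create a custom resource (CRD) that carries the key information of the Deployment and the Service

  - Write its controller with controller-runtime, which creates the corresponding Deployment and Service from the custom resource

  - Deploy the Operator to the cluster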

# Custom resource template: replica count, image, container port, node port, and so on
# AppService is our custom resource kind
apiVersion: kosmos.io/v1
kind: AppService
metadata:
  name: appservice-sample
spec:
  size: 2
  image: nginx:1.7.9
  ports:
    - port: 80
      targetPort: 80
      nodePort: 30002

With the approach settled, we can start implementing the Operator!

Operator

1. Initialize the project

# Create the project directory
$ mkdir -p github.com/kosmos.io/simple-controller
# Enter the project
$ cd github.com/kosmos.io/simple-controller
# Initialize the Go module
$ go mod init github.com/kosmos.io/simple-controller
go: creating new go.mod: module github.com/kosmos.io/simple-controller

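2. Initialize the CRD files

Create a directory and initialize the CRD files in it. All directory layouts in this article follow https://github.com/kosmos-io/kosmos and https://github.com/kubernetes/kubernetes.

# Create the api directory for version v1
$ mkdir -p pkg/apis/v1

Create doc.go. This file mainly holds package-level declarations, including the API group and version.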

// 'package' indicates that the generated code should be included at the package level

// 'groupName' indicates the name of the API Group

// +k8s:deepcopy-gen=package,register

// +groupName=kosmos.io

package v1

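Create appservice_types.go and write the structs that correspond to the custom resource YAML, so that the two map onto each other. AppService and AppServiceList must implement runtime.Object, so they carry the deepcopy-gen interfaces annotation. AppServiceSpec and AppServiceStatus are fields of AppService and do not need runtime.Object methods.

Because we need to create a Service for the port mapping, the spec uses corev1.ServicePort directly. The current state of an AppService is stored as an appsv1.DeploymentStatus.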

package v1

import (

appsv1 "k8s.io/api/apps/v1"

corev1 "k8s.io/api/core/v1"

metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"

)

// You need to edit this file according to your crd configuration

// Note: json tags are required. '+k8s:deepcopy-gen' annotation is required to generate deepcopy files

// +k8s:deepcopy-gen:interfaces=k8s.io/apimachinery/pkg/runtime.Object

// AppService is the Schema for the appservices API

type AppService struct {

metav1.TypeMeta `json:",inline"`

metav1.ObjectMeta `json:"metadata,omitempty"`

Spec AppServiceSpec `json:"spec"`

Status AppServiceStatus `json:"status"`

}

// AppServiceSpec is what we need for our spec in crd. Neither it nor AppServiceStatus need to generate runtime.Object

type AppServiceSpec struct {

Size *int32 `json:"size"`

Image string `json:"image"`

Ports []corev1.ServicePort `json:"ports,omitempty"`

}

// AppServiceStatus defines the observed state of AppService

type AppServiceStatus struct {

appsv1.DeploymentStatus `json:",inline"`

}

// +k8s:deepcopy-gen:interfaces=k8s.io/apimachinery/pkg/runtime.Object

// AppServiceList contains a list of AppService

type AppServiceList struct {

metav1.TypeMeta `json:",inline"`

metav1.ListMeta `json:"metadata,omitempty"`

Items []AppService `json:"items"`

}
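To make the role of the json tags concrete, here is a minimal standard-library sketch. It assumes a reduced `ServicePort` stand-in (the real type is `corev1.ServicePort`) and a hypothetical helper `decodeSpec`; it only shows how the fields of the sample manifest land in the Go struct.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// ServicePort is a simplified stand-in for corev1.ServicePort (assumption:
// only the three fields used by the sample manifest are modelled).
type ServicePort struct {
	Port       int32 `json:"port"`
	TargetPort int32 `json:"targetPort,omitempty"`
	NodePort   int32 `json:"nodePort,omitempty"`
}

// AppServiceSpec mirrors the json tags of the spec defined above.
type AppServiceSpec struct {
	Size  *int32        `json:"size"`
	Image string        `json:"image"`
	Ports []ServicePort `json:"ports,omitempty"`
}

// decodeSpec (hypothetical helper) parses the JSON form of a spec.
func decodeSpec(raw string) (AppServiceSpec, error) {
	var spec AppServiceSpec
	err := json.Unmarshal([]byte(raw), &spec)
	return spec, err
}

func main() {
	raw := `{"size":2,"image":"nginx:1.7.9","ports":[{"port":80,"targetPort":80,"nodePort":30002}]}`
	spec, err := decodeSpec(raw)
	if err != nil {
		panic(err)
	}
	fmt.Println(*spec.Size, spec.Image, spec.Ports[0].NodePort)
}
```

A field whose tag does not match the manifest key would simply stay at its zero value, which is why the note in the types file calls the json tags required.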

After creating these files, the project structure looks like this:

$ tree
.
├── go.mod
└── pkg
    └── apis
        └── v1
            ├── appservice_types.go
            └── doc.go

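With the API types in place, we can generate the code that clients need in order to work with the custom resource.

3. Generate code with code-generator

First create the directory for the code generation scripts. The scripts below are adapted from https://github.com/kosmos-io/kosmos and https://github.com/kubernetes/kubernetes:

$ mkdir hack && cd hack

In this directory create tools.go, which adds code-generator as a dependency. As long as no code imports code-generator, Go modules will not download the package for us. The file contents are: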

//go:build tools

// +build tools

// This package imports things required by build scripts, to force `go mod` to see them as dependencies

package tools

import _ "k8s.io/code-generator"

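Then create update-codegen.sh in the same hack directory. This is the script that drives code generation.

The script scans the CRD structs defined under pkg/apis/v1 and generates zz_generated.deepcopy.go and zz_generated.register.go.

zz_generated.deepcopy.go: contains the generated deep copy functions for the API types. A deep copy is an independent copy of an object, so it can be modified without affecting the original.

zz_generated.register.go: registers the API types with a runtime.Scheme (it provides the AddToScheme function that main.go calls later), so that clients and controllers know which Go type corresponds to a given group, version, and kind.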

#!/usr/bin/env bash

set -o errexit

set -o nounset

set -o pipefail

GROUP_PACKAGE="github.com"

GO_PACKAGE="${GROUP_PACKAGE}/kosmos.io/simple-controller"

# For all commands, the working directory is the parent directory(repo root).

REPO_ROOT=$(git rev-parse --show-toplevel)

cd "${REPO_ROOT}"

echo "Generating with deepcopy-gen"

GO111MODULE=on go install k8s.io/code-generator/cmd/deepcopy-gen

export GOPATH=$(go env GOPATH | awk -F ':' '{print $1}')

export PATH=$PATH:$GOPATH/bin

function cleanup() {

rm -rf "${REPO_ROOT}/${GROUP_PACKAGE}"

}

trap "cleanup" EXIT SIGINT

cleanup

mkdir -p "$(dirname "${REPO_ROOT}/${GO_PACKAGE}")"

deepcopy-gen \

--input-dirs="github.com/kosmos.io/simple-controller/pkg/apis/v1" \

--output-base="${REPO_ROOT}" \

--output-package="pkg/apis/v1" \

--output-file-base=zz_generated.deepcopy

echo "Generating with register-gen"

GO111MODULE=on go install k8s.io/code-generator/cmd/register-gen

register-gen \

--input-dirs="github.com/kosmos.io/simple-controller/pkg/apis/v1" \

--output-base="${REPO_ROOT}" \

--output-package="pkg/apis/v1" \

--output-file-base=zz_generated.register

mv "${REPO_ROOT}/${GO_PACKAGE}"/pkg/apis/v1/* "${REPO_ROOT}"/pkg/apis/v1
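Why the generated deep copy functions matter can be seen with plain Go. The sketch below uses a hypothetical, reduced `Spec` type and a hand-written `DeepCopy`; it is not the generated code, only an illustration of the property deepcopy-gen provides: the copy shares no pointers or slices with the original.

```go
package main

import "fmt"

// Spec is a hypothetical, reduced version of AppServiceSpec.
type Spec struct {
	Size  *int32
	Image string
	Ports []int32
}

// DeepCopy returns a copy that shares no memory with the receiver.
func (in *Spec) DeepCopy() *Spec {
	if in == nil {
		return nil
	}
	out := &Spec{Image: in.Image}
	if in.Size != nil {
		size := *in.Size
		out.Size = &size
	}
	if in.Ports != nil {
		out.Ports = make([]int32, len(in.Ports))
		copy(out.Ports, in.Ports)
	}
	return out
}

func main() {
	size := int32(2)
	orig := &Spec{Size: &size, Image: "nginx:1.7.9", Ports: []int32{80}}

	shallow := *orig        // plain struct copy: pointer and slice are still shared
	deep := orig.DeepCopy() // independent copy

	*shallow.Size = 5    // also changes orig.Size
	deep.Ports[0] = 8080 // does not touch orig.Ports

	fmt.Println(*orig.Size, orig.Ports[0], *deep.Size, deep.Ports[0])
	// prints: 5 80 2 8080
}
```

The shallow copy silently modifies the original through the shared pointer, while the deep copy stays isolated.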

Download the dependencies:

$ go mod tidy

go: finding module for package k8s.io/apimachinery/pkg/apis/meta/v1

go: finding module for package k8s.io/api/apps/v1

go: finding module for package k8s.io/code-generator

go: finding module for package k8s.io/api/core/v1

go: finding module for package sigs.k8s.io/controller-runtime/pkg/scheme

go: finding module for package sigs.k8s.io/controller-runtime/pkg/log

go: found sigs.k8s.io/controller-runtime/pkg/log in sigs.k8s.io/controller-runtime v0.16.3

go: found sigs.k8s.io/controller-runtime/pkg/scheme in sigs.k8s.io/controller-runtime v0.16.3

go: found k8s.io/code-generator in k8s.io/code-generator v0.28.3

go: found k8s.io/api/apps/v1 in k8s.io/api v0.28.3

go: found k8s.io/api/core/v1 in k8s.io/api v0.28.3

go: found k8s.io/apimachinery/pkg/apis/meta/v1 in k8s.io/apimachinery v0.28.3

$ go mod vendor

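Run the script to generate zz_generated.register.go and zz_generated.deepcopy.go. The script locates the repository root with git rev-parse, so the project must be a Git repository, and the script needs execute permission (chmod +x hack/update-codegen.sh).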

$ ./hack/update-codegen.sh

Generating deepcopy code for 1 targets

The whole project now looks like this:

# --prune -I vendor leaves the vendor directory out
$ tree --prune -I vendor
.
├── go.mod
├── go.sum
├── hack
│   ├── tools.go
│   └── update-codegen.sh
└── pkg
    └── apis
        └── v1
            ├── appservice_types.go
            ├── doc.go
            ├── zz_generated.register.go
            └── zz_generated.deepcopy.go

5 directories, 9 files

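4. Implement the controller logic

Create pkg/controller/appservice_controller.go to hold our controller and its core business logic.

We start with a basic reconciler struct that contains a client (for talking to the API server) and a Scheme (for serialization and deserialization).

package controller

import (
	"context"
	"encoding/json"
	"reflect"

	appsv1 "k8s.io/api/apps/v1"
	corev1 "k8s.io/api/core/v1"
	"k8s.io/apimachinery/pkg/api/errors"
	"k8s.io/apimachinery/pkg/runtime"
	"k8s.io/client-go/util/retry"
	ctrl "sigs.k8s.io/controller-runtime"
	"sigs.k8s.io/controller-runtime/pkg/client"
	"sigs.k8s.io/controller-runtime/pkg/log"

	apisv1 "github.com/kosmos.io/simple-controller/pkg/apis/v1"
	"github.com/kosmos.io/simple-controller/pkg/controller/resources"
)

// AppServiceReconciler reconciles a AppService object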

type AppServiceReconciler struct {

client.Client

Scheme *runtime.Scheme

}

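Next, implement the Reconcile method of the Reconciler interface. This is where the business logic for the events on our custom resource lives. req identifies the object being reconciled: its name and namespace.

Note:

controller-runtime watches the events on this resource (create, update, delete) and adds them to a queue. It then calls the Reconcile method of the Do field registered on the controller (Do implements the Reconciler interface).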
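The queue described in the note only carries object keys, and a key that is already waiting is not queued twice. The following is a toy model of that behaviour under stated assumptions: `Request`, `Queue`, and `drain` are hypothetical names for this sketch, and the real implementation is the workqueue package from client-go, which also handles rate limiting and retries.

```go
package main

import "fmt"

// Request is a stand-in for ctrl.Request: it carries only the object key.
type Request struct {
	Namespace, Name string
}

// Queue is a toy FIFO that drops keys that are already waiting.
type Queue struct {
	items   []Request
	pending map[Request]bool
}

func NewQueue() *Queue {
	return &Queue{pending: map[Request]bool{}}
}

// Add enqueues a key unless it is already pending.
func (q *Queue) Add(r Request) {
	if q.pending[r] {
		return
	}
	q.pending[r] = true
	q.items = append(q.items, r)
}

// Get pops the oldest key; ok is false when the queue is empty.
func (q *Queue) Get() (r Request, ok bool) {
	if len(q.items) == 0 {
		return Request{}, false
	}
	r = q.items[0]
	q.items = q.items[1:]
	delete(q.pending, r)
	return r, true
}

// drain calls reconcile once per queued key and returns the number of calls.
func drain(q *Queue, reconcile func(Request)) int {
	calls := 0
	for {
		r, ok := q.Get()
		if !ok {
			return calls
		}
		reconcile(r)
		calls++
	}
}

func main() {
	q := NewQueue()
	key := Request{Namespace: "default", Name: "appservice-sample"}
	q.Add(key) // create event
	q.Add(key) // update event arriving before the first one is processed
	n := drain(q, func(r Request) {
		fmt.Println("reconcile", r.Namespace+"/"+r.Name)
	})
	fmt.Println("calls:", n)
}
```

Because only the key is queued, Reconcile cannot tell which event triggered it. It has to read the current object and work out what to do, which is what the implementation below does.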

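Core logic of Reconcile: first check whether the associated resources exist. If they do not, create them, which means creating the Service as well as the Deployment. We also store the current spec in the Annotations, so that later we can compare the old and new declarations. If they differ, an update is needed, and again both resources have to be updated.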

var (

oldSpecAnnotation = "old/spec"

)

// Reconcile is the core logical part of your controller

// For more details, you can refer to here

// - https://pkg.go.dev/sigs.k8s.io/controller-runtime@v0.16.0/pkg/reconcile

func (r *AppServiceReconciler) Reconcile(ctx context.Context, req ctrl.Request) (ctrl.Result, error) {

logger := log.FromContext(ctx)

// Get the AppService instance

var appService apisv1.AppService

err := r.Get(ctx, req.NamespacedName, &appService)

if err != nil {

// Ignore when the AppService is deleted

if client.IgnoreNotFound(err) != nil {

return ctrl.Result{}, err

}

return ctrl.Result{}, nil

}

logger.Info("fetch appservice objects: ", "appservice", req.NamespacedName, "yaml", appService)

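	// If the Deployment does not exist, create the associated resources
	// If it exists, determine whether they need to be updated
	deploy := &appsv1.Deployment{}
	err = r.Get(ctx, req.NamespacedName, deploy)
	if err != nil && !errors.IsNotFound(err) {
		// Any error other than NotFound is unexpected; return it so the request is retried
		return ctrl.Result{}, err
	}
	if errors.IsNotFound(err) {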

// 1. Related Annotations

data, _ := json.Marshal(appService.Spec)

if appService.Annotations != nil {

appService.Annotations[oldSpecAnnotation] = string(data)

} else {

appService.Annotations = map[string]string{oldSpecAnnotation: string(data)}

}

if err := retry.RetryOnConflict(retry.DefaultRetry, func() error {

return r.Client.Update(ctx, &appService)

}); err != nil {

return ctrl.Result{}, err

}

// Creating Associated Resources

// 2. Create Deployment

deploy := resources.NewDeploy(&appService)

if err := r.Client.Create(ctx, deploy); err != nil {

return ctrl.Result{}, err

}

// 3. Create Service

service := resources.NewService(&appService)

if err := r.Create(ctx, service); err != nil {

return ctrl.Result{}, err

}

return ctrl.Result{}, nil

}

// Gets the spec of the original AppService object

oldspec := apisv1.AppServiceSpec{}

if err := json.Unmarshal([]byte(appService.Annotations[oldSpecAnnotation]), &oldspec); err != nil {

return ctrl.Result{}, err

}

// The current spec is inconsistent with the old object and needs to be updated

// Otherwise return

if !reflect.DeepEqual(appService.Spec, oldspec) {

// Update associated resources

newDeploy := resources.NewDeploy(&appService)

oldDeploy := &appsv1.Deployment{}

if err := r.Get(ctx, req.NamespacedName, oldDeploy); err != nil {

return ctrl.Result{}, err

}

oldDeploy.Spec = newDeploy.Spec

if err := retry.RetryOnConflict(retry.DefaultRetry, func() error {

return r.Client.Update(ctx, oldDeploy)

}); err != nil {

return ctrl.Result{}, err

}

newService := resources.NewService(&appService)

oldService := &corev1.Service{}

if err := r.Get(ctx, req.NamespacedName, oldService); err != nil {

return ctrl.Result{}, err

}

// You need to specify the ClusterIP to the previous one; otherwise, an error will be reported during the update

newService.Spec.ClusterIP = oldService.Spec.ClusterIP

oldService.Spec = newService.Spec

if err := retry.RetryOnConflict(retry.DefaultRetry, func() error {

return r.Client.Update(ctx, oldService)

}); err != nil {

return ctrl.Result{}, err

}

}

return ctrl.Result{}, nil

}
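The annotation-based comparison used in Reconcile can be isolated into a small standard-library sketch. `Spec`, `recordSpec`, and `specChanged` are hypothetical names introduced here, and two choices are assumptions of this sketch rather than something the code above does: a missing annotation is treated as "changed" instead of a JSON error, and the record is refreshed after an update so that later reconciles stop seeing a difference.

```go
package main

import (
	"encoding/json"
	"fmt"
	"reflect"
)

const oldSpecAnnotation = "old/spec"

// Spec is a reduced, hypothetical stand-in for AppServiceSpec.
type Spec struct {
	Size  int32  `json:"size"`
	Image string `json:"image"`
}

// recordSpec stores the JSON form of spec in the annotations map
// and returns the (possibly newly allocated) map.
func recordSpec(annotations map[string]string, spec Spec) (map[string]string, error) {
	data, err := json.Marshal(spec)
	if err != nil {
		return annotations, err
	}
	if annotations == nil {
		annotations = map[string]string{}
	}
	annotations[oldSpecAnnotation] = string(data)
	return annotations, nil
}

// specChanged reports whether spec differs from the recorded one.
// A missing annotation counts as "changed" rather than as an error.
func specChanged(annotations map[string]string, spec Spec) (bool, error) {
	raw, ok := annotations[oldSpecAnnotation]
	if !ok {
		return true, nil
	}
	var old Spec
	if err := json.Unmarshal([]byte(raw), &old); err != nil {
		return false, err
	}
	return !reflect.DeepEqual(old, spec), nil
}

func main() {
	spec := Spec{Size: 2, Image: "nginx:1.7.9"}
	ann, _ := recordSpec(nil, spec)

	changed, _ := specChanged(ann, spec)
	fmt.Println("unchanged spec:", changed) // false

	spec.Size = 3
	changed, _ = specChanged(ann, spec)
	fmt.Println("after scaling:", changed) // true

	// Refresh the record once the child resources have been updated.
	ann, _ = recordSpec(ann, spec)
	changed, _ = specChanged(ann, spec)
	fmt.Println("after refresh:", changed) // false
}
```

In the Reconcile shown above the annotation is written only in the create branch, so a spec that has changed once would keep comparing as different. Rewriting the annotation after a successful update, as in this sketch, is one possible way to avoid repeated updates.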

Add AppServiceReconciler to the manager. For registers our custom resource type as the one to watch. SetupWithManager is called from main.go, which creates the controller and registers it with the manager.

// SetupWithManager sets up the controller with the Manager.

func (r *AppServiceReconciler) SetupWithManager(mgr ctrl.Manager) error {

return ctrl.NewControllerManagedBy(mgr).

For(&apisv1.AppService{}).

Complete(r)

}

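The two supporting functions are resources.NewDeploy() and resources.NewService(). They build the Deployment and Service objects from the declaration in the custom resource.

Create a resources directory under the controller package and add the files there.

NewDeploy is implemented as follows: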

package resources

import (

appsv1 "k8s.io/api/apps/v1"

corev1 "k8s.io/api/core/v1"

metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"

"k8s.io/apimachinery/pkg/runtime/schema"

apisv1 "github.com/kosmos.io/simple-controller/pkg/apis/v1"

)

func NewDeploy(app *apisv1.AppService) *appsv1.Deployment {

labels := map[string]string{"app": app.Name}

selector := &metav1.LabelSelector{MatchLabels: labels}

return &appsv1.Deployment{

TypeMeta: metav1.TypeMeta{

APIVersion: "apps/v1",

Kind: "Deployment",

},

ObjectMeta: metav1.ObjectMeta{

Name: app.Name,

Namespace: app.Namespace,

OwnerReferences: []metav1.OwnerReference{

*metav1.NewControllerRef(app, schema.GroupVersionKind{

Group: app.GroupVersionKind().Group,

Version: app.GroupVersionKind().Version,

Kind: app.GroupVersionKind().Kind,

}),

},

},

Spec: appsv1.DeploymentSpec{

Replicas: app.Spec.Size,

Template: corev1.PodTemplateSpec{

ObjectMeta: metav1.ObjectMeta{

Labels: labels,

},

Spec: corev1.PodSpec{

Containers: newContainers(app),

},

},

Selector: selector,

},

}

}

func newContainers(app *apisv1.AppService) []corev1.Container {

var containerPorts []corev1.ContainerPort

for _, svcPort := range app.Spec.Ports {

cport := corev1.ContainerPort{}

cport.ContainerPort = svcPort.TargetPort.IntVal

containerPorts = append(containerPorts, cport)

}

return []corev1.Container{

{

Name: app.Name,

Image: app.Spec.Image,

Ports: containerPorts,

ImagePullPolicy: corev1.PullIfNotPresent,

},

}

}
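newContainers reads `TargetPort.IntVal`, which is zero when targetPort is omitted or given as a port name. The sketch below shows one possible way to handle those cases. `IntOrString` here is a simplified stand-in for the real `intstr.IntOrString` (the `IsString` field is an assumption of this sketch), and `containerPorts` is a hypothetical helper, not part of the project.

```go
package main

import "fmt"

// IntOrString is a simplified stand-in for intstr.IntOrString.
type IntOrString struct {
	IsString bool
	IntVal   int32
	StrVal   string
}

// ServicePort keeps only the fields relevant to this sketch.
type ServicePort struct {
	Port       int32
	TargetPort IntOrString
}

// containerPorts derives container port numbers from service ports.
// A numeric targetPort wins, an unset targetPort falls back to port,
// and a named targetPort is skipped because it carries no number.
func containerPorts(ports []ServicePort) []int32 {
	var out []int32
	for _, p := range ports {
		switch {
		case p.TargetPort.IsString:
			continue
		case p.TargetPort.IntVal != 0:
			out = append(out, p.TargetPort.IntVal)
		default:
			out = append(out, p.Port)
		}
	}
	return out
}

func main() {
	ports := []ServicePort{
		{Port: 80, TargetPort: IntOrString{IntVal: 8080}},
		{Port: 443}, // targetPort omitted
		{Port: 9090, TargetPort: IntOrString{IsString: true, StrVal: "metrics"}},
	}
	fmt.Println(containerPorts(ports))
}
```

The fallback to `port` follows the Service semantics quoted in the CRD schema later in this article: if targetPort is not specified, the value of the port field is used.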

NewService is implemented as follows:

package resources

import (

corev1 "k8s.io/api/core/v1"

metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"

"k8s.io/apimachinery/pkg/runtime/schema"

apisv1 "github.com/kosmos.io/simple-controller/pkg/apis/v1"

)

func NewService(app *apisv1.AppService) *corev1.Service {

return &corev1.Service{

TypeMeta: metav1.TypeMeta{

Kind: "Service",

APIVersion: "v1",

},

ObjectMeta: metav1.ObjectMeta{

Name: app.Name,

Namespace: app.Namespace,

OwnerReferences: []metav1.OwnerReference{

*metav1.NewControllerRef(app, schema.GroupVersionKind{

Group: app.GroupVersionKind().Group,

Version: app.GroupVersionKind().Version,

Kind: app.GroupVersionKind().Kind,

}),

},

},

Spec: corev1.ServiceSpec{

Type: corev1.ServiceTypeNodePort,

Ports: app.Spec.Ports,

Selector: map[string]string{

"app": app.Name,

},

},

}

}
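The Service finds its Pods only because its selector (`app: <name>`) matches the labels that NewDeploy puts on the Pod template. A minimal sketch of equality-based selector matching, with a hypothetical `matches` helper, makes that dependency explicit:

```go
package main

import "fmt"

// matches reports whether labels satisfy an equality-based selector:
// every key/value pair in selector must be present in labels.
// Note: an empty selector trivially matches in this sketch; a real
// Service without a selector is handled differently by Kubernetes.
func matches(selector, labels map[string]string) bool {
	for k, v := range selector {
		if got, ok := labels[k]; !ok || got != v {
			return false
		}
	}
	return true
}

func main() {
	selector := map[string]string{"app": "appservice-sample"}

	podLabels := map[string]string{"app": "appservice-sample", "pod-template-hash": "abc123"}
	otherLabels := map[string]string{"app": "something-else"}

	fmt.Println(matches(selector, podLabels))
	fmt.Println(matches(selector, otherLabels))
}
```

If NewDeploy and NewService ever derived their labels differently, the Service would be created successfully but would route to no Pods, so keeping both on `app.Name` is what ties the two resources together.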

OK, the controller logic for AppService is now complete.

5. Start the manager

Create cmd/main.go, which starts the manager and registers the controller with it.

package main

import (

"os"

ctrl "sigs.k8s.io/controller-runtime"

"sigs.k8s.io/controller-runtime/pkg/log/zap"

apisv1 "github.com/kosmos.io/simple-controller/pkg/apis/v1"

"github.com/kosmos.io/simple-controller/pkg/controller"

)

var (

setupLog = ctrl.Log.WithName("setup")

)

func main() {

ctrl.SetLogger(zap.New())

setupLog.Info("starting manager")

mgr, err := ctrl.NewManager(ctrl.GetConfigOrDie(), ctrl.Options{})

if err != nil {

setupLog.Error(err, "unable to start manager")

os.Exit(1)

}

// in a real controller, we'd create a new scheme for this

if err = apisv1.AddToScheme(mgr.GetScheme()); err != nil {

setupLog.Error(err, "unable to add scheme")

os.Exit(1)

}

if err = (&controller.AppServiceReconciler{

Client: mgr.GetClient(),

Scheme: mgr.GetScheme(),

}).SetupWithManager(mgr); err != nil {

setupLog.Error(err, "unable to create controller", "controller", "AppService")

os.Exit(1)

}

setupLog.Info("starting manager")

if err := mgr.Start(ctrl.SetupSignalHandler()); err != nil {

setupLog.Error(err, "problem running manager")

os.Exit(1)

}

}
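The call to `apisv1.AddToScheme` is what lets the manager's client turn a group, version, and kind into a Go type. As a rough mental model only, a scheme can be pictured as a registry keyed by GVK. The `Scheme`, `GVK`, and `AddKnownType` below are hypothetical toy types for this sketch; the real one is `runtime.Scheme` in k8s.io/apimachinery and does considerably more (conversion, defaulting, versioning).

```go
package main

import (
	"fmt"
	"reflect"
)

// GVK identifies a kind within an API group and version.
type GVK struct{ Group, Version, Kind string }

// Scheme is a toy registry that maps GVKs to Go types.
type Scheme struct {
	types map[GVK]reflect.Type
}

func NewScheme() *Scheme {
	return &Scheme{types: map[GVK]reflect.Type{}}
}

// AddKnownType registers the type of obj (a pointer to a struct) under gvk.
func (s *Scheme) AddKnownType(gvk GVK, obj interface{}) {
	s.types[gvk] = reflect.TypeOf(obj).Elem()
}

// New returns a fresh zero-valued object for gvk, or an error if unknown.
func (s *Scheme) New(gvk GVK) (interface{}, error) {
	t, ok := s.types[gvk]
	if !ok {
		return nil, fmt.Errorf("no kind %q is registered for %s/%s", gvk.Kind, gvk.Group, gvk.Version)
	}
	return reflect.New(t).Interface(), nil
}

// AppService is a placeholder type for the sketch.
type AppService struct{ Name string }

func main() {
	s := NewScheme()
	gvk := GVK{Group: "kosmos.io", Version: "v1", Kind: "AppService"}
	s.AddKnownType(gvk, &AppService{})

	obj, err := s.New(gvk)
	fmt.Printf("%T %v\n", obj, err)

	_, err = s.New(GVK{Group: "kosmos.io", Version: "v1", Kind: "Unknown"})
	fmt.Println(err)
}
```

Without the registration step, a lookup for the AppService kind fails in the same way the second call does here, which is why main.go adds the types to the scheme before setting up the controller.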

6. Install and debug locally

First, add the CRD file deploy/crd.yaml:

---

apiVersion: apiextensions.k8s.io/v1

kind: CustomResourceDefinition

metadata:

name: appservices.kosmos.io

spec:

group: kosmos.io

names:

kind: AppService

listKind: AppServiceList

plural: appservices

singular: appservice

scope: Namespaced

versions:

- name: v1

schema:

openAPIV3Schema:

description: AppService is the Schema for the appservices API

properties:

apiVersion:

description: 'APIVersion defines the versioned schema of this representation

of an object. Servers should convert recognized schemas to the latest

internal value, and may reject unrecognized values. More info: https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#resources'

type: string

kind:

description: 'Kind is a string value representing the REST resource this

object represents. Servers may infer this from the endpoint the client

submits requests to. Cannot be updated. In CamelCase. More info: https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#types-kinds'

type: string

metadata:

type: object

spec:

description: AppServiceSpec defines the desired state of AppService

properties:

image:

type: string

ports:

items:

description: ServicePort contains information on service's port.

properties:

appProtocol:

description: "The application protocol for this port. This is

used as a hint for implementations to offer richer behavior

for protocols that they understand. This field follows standard

Kubernetes label syntax. Valid values are either: \n * Un-prefixed

protocol names - reserved for IANA standard service names

(as per RFC-6335 and https://www.iana.org/assignments/service-names).

\n * Kubernetes-defined prefixed names: * 'kubernetes.io/h2c'

- HTTP/2 over cleartext as described in https://www.rfc-editor.org/rfc/rfc7540

* 'kubernetes.io/ws' - WebSocket over cleartext as described

in https://www.rfc-editor.org/rfc/rfc6455 * 'kubernetes.io/wss'

- WebSocket over TLS as described in https://www.rfc-editor.org/rfc/rfc6455

\n * Other protocols should use implementation-defined prefixed

names such as mycompany.com/my-custom-protocol."

type: string

name:

description: The name of this port within the service. This

must be a DNS_LABEL. All ports within a ServiceSpec must have

unique names. When considering the endpoints for a Service,

this must match the 'name' field in the EndpointPort. Optional

if only one ServicePort is defined on this service.

type: string

nodePort:

description: 'The port on each node on which this service is

exposed when type is NodePort or LoadBalancer. Usually assigned

by the system. If a value is specified, in-range, and not

in use it will be used, otherwise the operation will fail. If

not specified, a port will be allocated if this Service requires

one. If this field is specified when creating a Service which

does not need it, creation will fail. This field will be wiped

when updating a Service to no longer need it (e.g. changing

type from NodePort to ClusterIP). More info: https://kubernetes.io/docs/concepts/services-networking/service/#type-nodeport'

format: int32

type: integer

port:

description: The port that will be exposed by this service.

format: int32

type: integer

protocol:

default: TCP

description: The IP protocol for this port. Supports "TCP",

"UDP", and "SCTP". Default is TCP.

type: string

targetPort:

anyOf:

- type: integer

- type: string

description: 'Number or name of the port to access on the pods

targeted by the service. Number must be in the range 1 to

65535. Name must be an IANA_SVC_NAME. If this is a string,

it will be looked up as a named port in the target Pod''s

container ports. If this is not specified, the value of the

''port'' field is used (an identity map). This field is ignored

for services with clusterIP=None, and should be omitted or

set equal to the ''port'' field. More info: https://kubernetes.io/docs/concepts/services-networking/service/#defining-a-service'

x-kubernetes-int-or-string: true

required:

- port

type: object

type: array

size:

description: Size is the desired number of replicas of the Deployment.

format: int32

type: integer

required:

- image

- size

type: object

status:

description: AppServiceStatus defines the observed state of AppService

properties:

availableReplicas:

description: Total number of available pods (ready for at least minReadySeconds)

targeted by this deployment.

format: int32

type: integer

collisionCount:

description: Count of hash collisions for the Deployment. The Deployment

controller uses this field as a collision avoidance mechanism when

it needs to create the name for the newest ReplicaSet.

format: int32

type: integer

conditions:

description: Represents the latest available observations of a deployment's

current state.

items:

description: DeploymentCondition describes the state of a deployment

at a certain point.

properties:

lastTransitionTime:

description: Last time the condition transitioned from one status

to another.

format: date-time

type: string

lastUpdateTime:

description: The last time this condition was updated.

format: date-time

type: string

message:

description: A human readable message indicating details about

the transition.

type: string

reason:

description: The reason for the condition's last transition.

type: string

status:

description: Status of the condition, one of True, False, Unknown.

type: string

type:

description: Type of deployment condition.

type: string

required:

- status

- type

type: object

type: array

observedGeneration:

description: The generation observed by the deployment controller.

format: int64

type: integer

readyReplicas:

description: readyReplicas is the number of pods targeted by this

Deployment with a Ready Condition.

format: int32

type: integer

replicas:

description: Total number of non-terminated pods targeted by this

deployment (their labels match the selector).

format: int32

type: integer

unavailableReplicas:

description: Total number of unavailable pods targeted by this deployment.

This is the total number of pods that are still required for the

deployment to have 100% available capacity. They may either be pods

that are running but not yet available or pods that still have not

been created.

format: int32

type: integer

updatedReplicas:

description: Total number of non-terminated pods targeted by this

deployment that have the desired template spec.

format: int32

type: integer

type: object

type: object

served: true

storage: true

subresources:

status: {}

Add a sample file for testing, example/example_appservice.yaml:

apiVersion: kosmos.io/v1
kind: AppService
metadata:
  name: appservice-sample
spec:
  size: 2
  image: nginx:1.7.9
  ports:
    - port: 80
      targetPort: 80
      nodePort: 30002
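The sample uses nodePort 30002, which lies inside the default NodePort range of 30000 to 32767. A small sketch of a pre-flight check is shown below. `validateNodePort` is a hypothetical helper, and the bounds are the defaults only: a cluster can change them with the kube-apiserver flag `--service-node-port-range`.

```go
package main

import "fmt"

// Default NodePort range of kube-apiserver. Clusters may override it,
// so treat these bounds as an assumption of this sketch.
const (
	minNodePort = 30000
	maxNodePort = 32767
)

// validateNodePort accepts 0 (let the cluster allocate a port)
// or any value inside the default range.
func validateNodePort(p int32) error {
	if p == 0 {
		return nil
	}
	if p < minNodePort || p > maxNodePort {
		return fmt.Errorf("nodePort %d is outside the range %d-%d", p, minNodePort, maxNodePort)
	}
	return nil
}

func main() {
	for _, p := range []int32{0, 30002, 8080} {
		fmt.Println(p, validateNodePort(p))
	}
}
```

A value outside the range would be rejected by the API server when the controller tries to create the Service, so checking early gives a clearer error.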

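If we have a reachable Kubernetes cluster, we can debug directly against it. Configure the cluster access information in ~/.kube/config for the local user. The output below shows that the cluster is reachable: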

$ kubectl cluster-info

Kubernetes control plane is running at https://36.x.x.x:6443

coredns is running at https://36.x.x.x:6443/api/v1/namespaces/kube-system/services/coredns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

First, install the CRD in the cluster:

$ kubectl apply -f deploy/crd.yaml

customresourcedefinition.apiextensions.k8s.io/appservices.kosmos.io created

If kubectl get crd lists the CRD we defined, the CRD was installed successfully.

$ kubectl get crd

NAME CREATED AT

appservices.kosmos.io 2023-11-01T06:46:57Z

Start the Operator locally by running main.go:

GOROOT=/opt/homebrew/Cellar/go/1.21.3/libexec #gosetup

GOPATH=/Users/george/go #gosetup

/opt/homebrew/Cellar/go/1.21.3/libexec/bin/go build -o /private/var/folders/dn/nx3mk3qd0rg38j89vq1r2b3r0000gn/T/GoLand/___go_build_github_com_kosmos_io_simple_controller_cmd github.com/kosmos.io/simple-controller/cmd #gosetup

/private/var/folders/dn/nx3mk3qd0rg38j89vq1r2b3r0000gn/T/GoLand/___go_build_github_com_kosmos_io_simple_controller_cmd

{"level":"info","ts":"2023-11-01T14:52:30+08:00","logger":"setup","msg":"starting manager"}

{"level":"info","ts":"2023-11-01T14:52:30+08:00","logger":"controller-runtime.builder","msg":"skip registering a mutating webhook, object does not implement admission.Defaulter or WithDefaulter wasn't called","GVK":"kosmos.io/v1, Kind=AppService"}

{"level":"info","ts":"2023-11-01T14:52:30+08:00","logger":"controller-runtime.builder","msg":"skip registering a validating webhook, object does not implement admission.Validator or WithValidator wasn't called","GVK":"kosmos.io/v1, Kind=AppService"}

{"level":"info","ts":"2023-11-01T14:52:30+08:00","logger":"setup","msg":"starting manager"}

{"level":"info","ts":"2023-11-01T14:52:30+08:00","logger":"controller-runtime.metrics","msg":"Starting metrics server"}

{"level":"info","ts":"2023-11-01T14:52:30+08:00","msg":"Starting EventSource","controller":"appservice","controllerGroup":"kosmos.io","controllerKind":"AppService","source":"kind source: *v1.AppService"}

{"level":"info","ts":"2023-11-01T14:52:30+08:00","msg":"Starting Controller","controller":"appservice","controllerGroup":"kosmos.io","controllerKind":"AppService"}

{"level":"info","ts":"2023-11-01T14:52:30+08:00","logger":"controller-runtime.metrics","msg":"Serving metrics server","bindAddress":":8080","secure":false}

{"level":"info","ts":"2023-11-01T14:52:30+08:00","msg":"Starting workers","controller":"appservice","controllerGroup":"kosmos.io","controllerKind":"AppService","worker count":1}

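The command above runs the Operator locally and has it watch the AppService custom resources in the Kubernetes cluster, using ~/.kube/config for the cluster connection. Now we add an AppService resource and observe how the local Operator reacts. The manifest is the one we prepared above (example/example_appservice.yaml).

Create the custom resource: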

$ kubectl apply -f example/example_appservice.yaml

appservice.kosmos.io/appservice-sample created

The terminal running the controller now shows the corresponding events:

{"level":"info","ts":"2023-11-01T14:54:25+08:00","msg":"fetch appservice objects: ","controller":"appservice","controllerGroup":"kosmos.io","controllerKind":"AppService","AppService":{"name":"appservice-sample","namespace":"default"},"namespace":"default","name":"appservice-sample","reconcileID":"2871d06d-9763-4795-9864-282683b60656","appservice":{"name":"appservice-sample","namespace":"default"},"yaml":{"kind":"AppService","apiVersion":"kosmos.io/v1","metadata":{"name":"appservice-sample","namespace":"default","uid":"06ca6134-8072-4d04-bdac-06bc3dffcc2b","resourceVersion":"1696988","generation":1,"creationTimestamp":"2023-11-01T06:53:38Z","annotations":{"kubectl.kubernetes.io/last-applied-configuration":"{\"apiVersion\":\"kosmos.io/v1\",\"kind\":\"AppService\",\"metadata\":{\"annotations\":{},\"name\":\"appservice-sample\",\"namespace\":\"default\"},\"spec\":{\"image\":\"nginx:1.7.9\",\"ports\":[{\"nodePort\":30002,\"port\":80,\"targetPort\":80}],\"size\":2}}\n"},"managedFields":[{"manager":"kubectl-client-side-apply","operation":"Update","apiVersion":"kosmos.io/v1","time":"2023-11-01T06:53:38Z","fieldsType":"FieldsV1","fieldsV1":{"f:metadata":{"f:annotations":{".":{},"f:kubectl.kubernetes.io/last-applied-configuration":{}}},"f:spec":{".":{},"f:image":{},"f:ports":{},"f:size":{}}}}]},"spec":{"size":2,"image":"nginx:1.7.9","ports":[{"protocol":"TCP","port":80,"targetPort":80,"nodePort":30002}]},"status":{}}}

{"level":"info","ts":"2023-11-01T14:54:25+08:00","msg":"fetch appservice objects: ","controller":"appservice","controllerGroup":"kosmos.io","controllerKind":"AppService","AppService":{"name":"appservice-sample","namespace":"default"},"namespace":"default","name":"appservice-sample","reconcileID":"c319df94-4941-4fe5-a7ac-a9d72f85861d","appservice":{"name":"appservice-sample","namespace":"default"},"yaml":{"kind":"AppService","apiVersion":"kosmos.io/v1","metadata":{"name":"appservice-sample","namespace":"default","uid":"06ca6134-8072-4d04-bdac-06bc3dffcc2b","resourceVersion":"1696989","generation":1,"creationTimestamp":"2023-11-01T06:53:38Z","annotations":{"kubectl.kubernetes.io/last-applied-configuration":"{\"apiVersion\":\"kosmos.io/v1\",\"kind\":\"AppService\",\"metadata\":{\"annotations\":{},\"name\":\"appservice-sample\",\"namespace\":\"default\"},\"spec\":{\"image\":\"nginx:1.7.9\",\"ports\":[{\"nodePort\":30002,\"port\":80,\"targetPort\":80}],\"size\":2}}\n","old/spec":"{\"size\":2,\"image\":\"nginx:1.7.9\",\"ports\":[{\"protocol\":\"TCP\",\"port\":80,\"targetPort\":80,\"nodePort\":30002}]}"},"managedFields":[{"manager":"___go_build_github_com_kosmos_io_simple_controller_cmd","operation":"Update","apiVersion":"kosmos.io/v1","time":"2023-11-01T06:53:38Z","fieldsType":"FieldsV1","fieldsV1":{"f:metadata":{"f:annotations":{"f:old/spec":{}}}}},{"manager":"kubectl-client-side-apply","operation":"Update","apiVersion":"kosmos.io/v1","time":"2023-11-01T06:53:38Z","fieldsType":"FieldsV1","fieldsV1":{"f:metadata":{"f:annotations":{".":{},"f:kubectl.kubernetes.io/last-applied-configuration":{}}},"f:spec":{".":{},"f:image":{},"f:ports":{},"f:size":{}}}}]},"spec":{"size":2,"image":"nginx:1.7.9","ports":[{"protocol":"TCP","port":80,"targetPort":80,"nodePort":30002}]},"status":{}}}

Then we can check whether the expected resources have appeared in the cluster:

$ kubectl get AppService

NAME AGE

appservice-sample 2m24s

$ kubectl get deploy

NAME READY UP-TO-DATE AVAILABLE AGE

appservice-sample 2/2 2 2 2m29s

$ kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

appservice-sample NodePort * <none> 80:30002/TCP 2m36s

kubernetes ClusterIP * <none> 443/TCP 4d21h

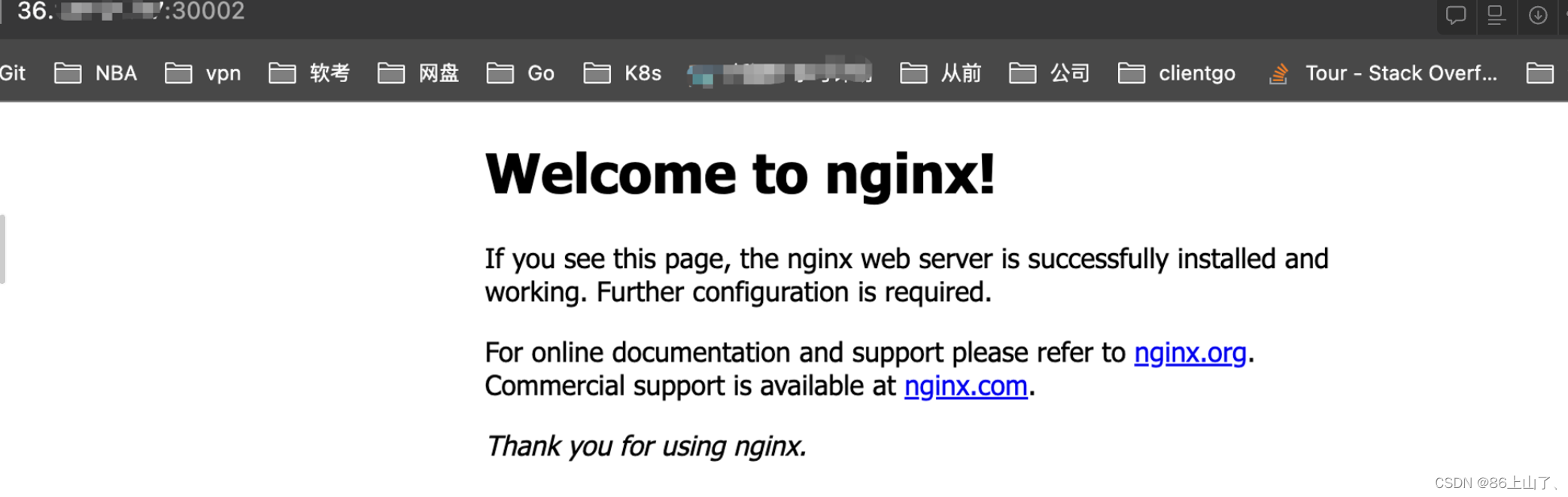

然后,我们通过主机IP+30002端口通过Service去访问Pod:

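7. Deploy to the cluster

So far we run the Operator on our own machine to manage AppService resources, but once the local process stops, the resources are no longer watched. If we deploy the Operator to Kubernetes as an application, it runs in the cluster and keeps watching the resources.

First, build the image and push it to an image registry.

To build the image we need a Dockerfile: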

# Build the manager binary

FROM golang:1.21 as builder

ARG TARGETOS

ARG TARGETARCH

WORKDIR /workspace

# Copy the Go Modules manifests

COPY go.mod go.mod

COPY go.sum go.sum

# cache deps before building and copying source so that we don't need to re-download as much

# and so that source changes don't invalidate our downloaded layer

RUN go mod download

# Copy the go source

COPY cmd/main.go cmd/main.go

COPY pkg/apis pkg/apis

COPY pkg/controller pkg/controller

# Build

# the GOARCH has not a default value to allow the binary be built according to the host where the command

# was called. For example, if we call make docker-build in a local env which has the Apple Silicon M1 SO

# the docker BUILDPLATFORM arg will be linux/arm64 when for Apple x86 it will be linux/amd64. Therefore,

# by leaving it empty we can ensure that the container and binary shipped on it will have the same platform.

RUN CGO_ENABLED=0 GOOS=${TARGETOS:-linux} GOARCH=${TARGETARCH} go build -a -o operator cmd/main.go

# Use distroless as minimal base image to package the manager binary

# Refer to https://github.com/GoogleContainerTools/distroless for more details

FROM gcr.io/distroless/static:nonroot

WORKDIR /

COPY --from=builder /workspace/operator .

USER 65532:65532

ENTRYPOINT ["/operator"]

Then create a Makefile that runs the image build.

IMG ?= controller:latest
CONTAINER_TOOL ?= docker

# Build and push docker image for the operator for cross-platform support
PLATFORMS ?= linux/arm64,linux/amd64,linux/s390x,linux/ppc64le
.PHONY: docker-buildx
docker-buildx:
	# copy existing Dockerfile and insert --platform=${BUILDPLATFORM} into Dockerfile.cross, and preserve the original Dockerfile
	sed -e '1 s/\(^FROM\)/FROM --platform=\$$\{BUILDPLATFORM\}/; t' -e ' 1,// s//FROM --platform=\$$\{BUILDPLATFORM\}/' Dockerfile > Dockerfile.cross
	- $(CONTAINER_TOOL) buildx create --name project-v3-builder
	$(CONTAINER_TOOL) buildx use project-v3-builder
	- $(CONTAINER_TOOL) buildx build --push --platform=$(PLATFORMS) --tag ${IMG} -f Dockerfile.cross .
	- $(CONTAINER_TOOL) buildx rm project-v3-builder
	rm Dockerfile.cross

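Now we can run the image build from the project root:

# your-registry can be your own Docker Hub name, or any image registry you have access to
# project-name is usually the name of your project
# PLATFORMS is the architecture (or architectures) to build the image for
$ make docker-buildx IMG=<your-registry>/<project-name>:<tag, version> PLATFORMS=<linux/amd64, your Server Architecture>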

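Our Operator has now been pushed to the registry as an image. Next we create the resources that pull and run this image.

Create deploy/deploy.yaml, which pulls and runs the Operator image and associates it with a ServiceAccount.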

apiVersion: apps/v1
kind: Deployment
metadata:
  name: controller-operator
  namespace: default
spec:
  replicas: 1
  selector:
    matchLabels:
      control-plane: controller-manager
  template:
    metadata:
      labels:
        control-plane: controller-manager
    spec:
      serviceAccountName: controller-manager
      containers:
        - name: operator
          image: <your-registry>/<project-name>:<tag, version>
          imagePullPolicy: Always
          command:
            - /operator
          resources:
            limits:
              memory: 200Mi
              cpu: 250m
            requests:
              cpu: 100m
              memory: 200Mi

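The ServiceAccount is bound to the ClusterRole (which specifies which resources in the cluster may be operated on) through a ClusterRoleBinding. All three files live in the deploy directory.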

# ClusterRole (deploy/role.yaml)
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: manager-role
rules:
  - apiGroups: ['*']
    resources: ['*']
    verbs: ["*"]
  - nonResourceURLs: ['*']
    verbs: ["get"]

# ClusterRoleBinding (deploy/role_binding.yaml)
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: manager-rolebinding
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: manager-role
subjects:
  - kind: ServiceAccount
    name: controller-manager
    namespace: default

# ServiceAccount (deploy/service_account.yaml)
apiVersion: v1
kind: ServiceAccount
metadata:
  name: controller-manager
  namespace: default
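The ClusterRole above grants every verb on every resource, which is convenient for a tutorial but far more than this controller needs. The sketch below models how a resource rule with the `*` wildcard is matched, and compares it with a narrower rule set. Everything here is an assumption for illustration: `Rule` is a reduced model of an RBAC rule, `allowed` is a hypothetical helper, and the narrower verbs and resources are a guess based on what the Reconcile code in this article touches.

```go
package main

import "fmt"

// Rule is a reduced model of an RBAC rule (resource rules only).
type Rule struct {
	APIGroups []string
	Resources []string
	Verbs     []string
}

// has reports whether list contains want or the "*" wildcard.
func has(list []string, want string) bool {
	for _, v := range list {
		if v == "*" || v == want {
			return true
		}
	}
	return false
}

// allowed reports whether any rule permits verb on group/resource.
func allowed(rules []Rule, group, resource, verb string) bool {
	for _, r := range rules {
		if has(r.APIGroups, group) && has(r.Resources, resource) && has(r.Verbs, verb) {
			return true
		}
	}
	return false
}

func main() {
	wildcard := []Rule{{APIGroups: []string{"*"}, Resources: []string{"*"}, Verbs: []string{"*"}}}

	// Hypothetical narrower rule set; "" is the core API group.
	verbs := []string{"get", "list", "watch", "create", "update"}
	narrow := []Rule{
		{APIGroups: []string{"kosmos.io"}, Resources: []string{"appservices"}, Verbs: verbs},
		{APIGroups: []string{"apps"}, Resources: []string{"deployments"}, Verbs: verbs},
		{APIGroups: []string{""}, Resources: []string{"services"}, Verbs: verbs},
	}

	fmt.Println(allowed(wildcard, "", "secrets", "delete"))
	fmt.Println(allowed(narrow, "", "secrets", "delete"))
	fmt.Println(allowed(narrow, "apps", "deployments", "create"))
}
```

With the wildcard rule the Operator's ServiceAccount could, for example, delete Secrets anywhere in the cluster. A rule set limited to the resources the controller manages keeps the behaviour it needs while denying everything else.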

The final project structure is:

$ tree --prune -I vendor
.
├── Dockerfile
├── Makefile
├── cmd
│   └── main.go
├── deploy
│   ├── crd.yaml
│   ├── deploy.yaml
│   ├── role.yaml
│   ├── role_binding.yaml
│   └── service_account.yaml
├── example
│   └── example_appservice.yaml
├── go.mod
├── go.sum
├── hack
│   ├── tools.go
│   └── update-codegen.sh
└── pkg
    ├── apis
    │   └── v1
    │       ├── appservice_types.go
    │       ├── doc.go
    │       ├── zz_generated.deepcopy.go
    │       └── zz_generated.register.go
    └── controller
        ├── appservice_controller.go
        └── resources
            ├── NewDeploy.go
            └── newService.go

10 directories, 21 files

Deploy the Operator to the cluster

# Deploy the resources

$ kubectl apply -f deploy/

customresourcedefinition.apiextensions.k8s.io/appservices.kosmos.io created

deployment.apps/controller-operator created

clusterrole.rbac.authorization.k8s.io/manager-role created

clusterrolebinding.rbac.authorization.k8s.io/manager-rolebinding created

serviceaccount/controller-manager created

# Check the Operator status

$ kubectl get deploy

NAME READY UP-TO-DATE AVAILABLE AGE

controller-operator 1/1 1 1 53s

# Create an instance

$ kubectl apply -f example/

appservice.kosmos.io/appservice-sample created

# Check the AppService status

$ kubectl get AppService

NAME AGE

appservice-sample 50s

$ kubectl get deploy

NAME READY UP-TO-DATE AVAILABLE AGE

appservice-sample 2/2 2 2 39s

$ kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

appservice-sample NodePort * <none> 80:30002/TCP 52s

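At this point the Operator is running in the cluster and manages AppService resources.

8. Uninstall and clean up

- If you ran the project locally, cleanup is simple:

  - Stop the controller process

  - Delete the custom resource instance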

$ kubectl delete -f example/example_appservice.yaml

appservice.kosmos.io "appservice-sample" deleted

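- If you deployed the project to the cluster, cleanup is just as simple:

  - Delete the custom resource instance

  - Delete the CRD, the Operator, and the related resources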

$ kubectl delete -f example/example_appservice.yaml

appservice.kosmos.io "appservice-sample" deleted

$ kubectl delete -f deploy/

customresourcedefinition.apiextensions.k8s.io "appservices.kosmos.io" deleted

deployment.apps "controller-operator" deleted

clusterrole.rbac.authorization.k8s.io "manager-role" deleted

clusterrolebinding.rbac.authorization.k8s.io "manager-rolebinding" deleted

serviceaccount "controller-manager" deleted
