Notes on a pitfall, a big one, that I hit while setting up an HBase pseudo-distributed environment: "ERROR: KeeperErrorCode = NoNode for /hbase/master"

Environment: Ubuntu 18.04, Hadoop 2.7.7, HBase 2.1.1

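I followed the usual steps found online:

1. Set the environment variables HBASE_HOME and PATH.

2. Use the ZooKeeper bundled with HBase, and edit hbase-env.sh, hbase-site.xml and regionservers accordingly.

3. Run start-hbase.sh to start HBase.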

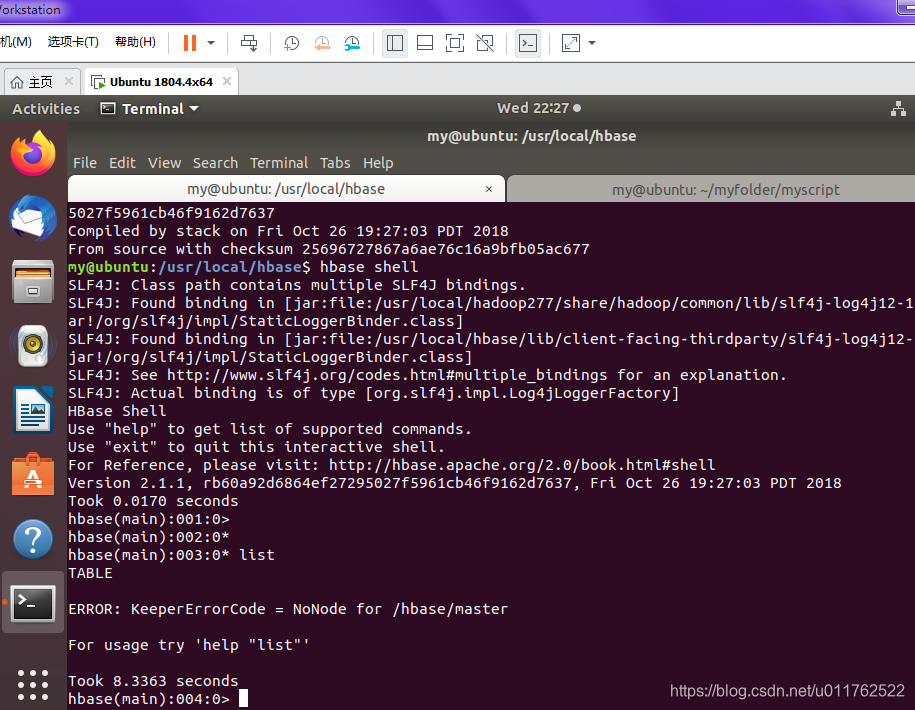

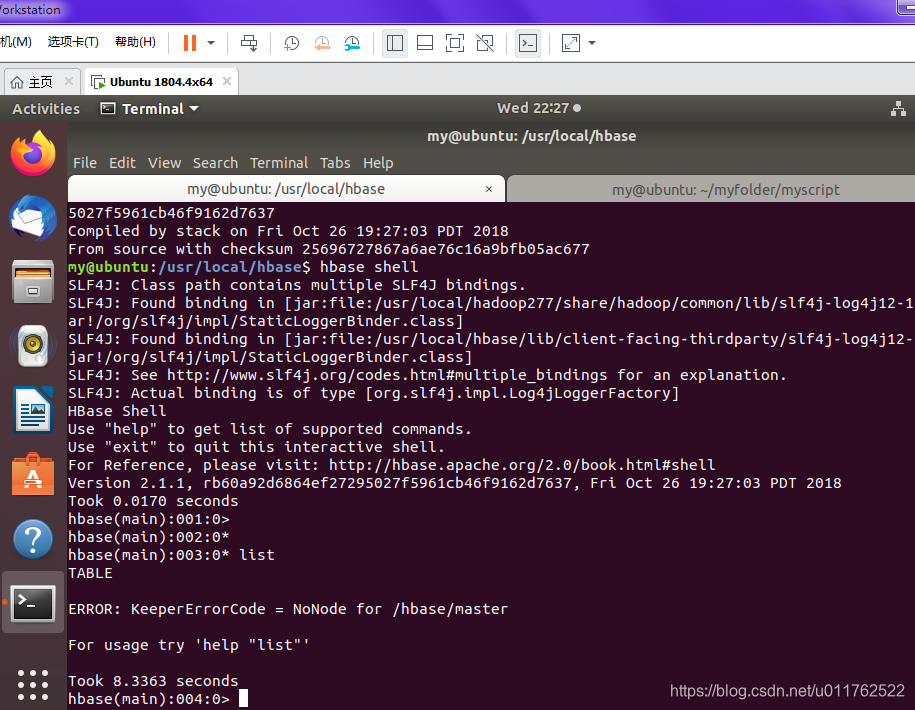

Entering the command-line environment with hbase shell then fails with "ERROR: KeeperErrorCode = NoNode for /hbase/master", as shown below:

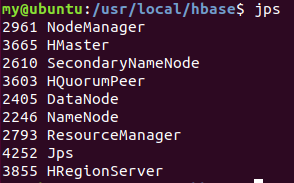

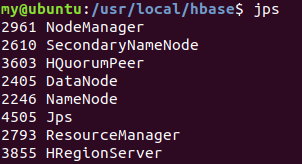

Checking the running processes at the same time showed that HMaster starts, then shuts itself down and disappears a few seconds later.

I then looked at the HBase startup log. In the logs directory under the HBase installation directory I found hbase-my-master-ubuntu.log, which contains:

2021-06-12 16:34:25,501 INFO [main] zookeeper.ZooKeeper: Initiating client connection, connectString=192.168.72.130:2181 sessionTimeout=90000 watcher=org.apache.hadoop.hbase.zookeeper.PendingWatcher@4dc8c0ea

2021-06-12 16:34:25,521 INFO [main-SendThread(192.168.72.130:2181)] zookeeper.ClientCnxn: Opening socket connection to server 192.168.72.130/192.168.72.130:2181. Will not attempt to authenticate using SASL (unknown error)

2021-06-12 16:34:25,528 WARN [main-SendThread(192.168.72.130:2181)] zookeeper.ClientCnxn: Session 0x0 for server null, unexpected error, closing socket connection and attempting reconnect

java.net.ConnectException: Connection refused

at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)

at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:714)

at org.apache.zookeeper.ClientCnxnSocketNIO.doTransport(ClientCnxnSocketNIO.java:361)

at org.apache.zookeeper.ClientCnxn$SendThread.run(ClientCnxn.java:1141)

2021-06-12 16:34:26,633 INFO [main-SendThread(192.168.72.130:2181)] zookeeper.ClientCnxn: Opening socket connection to server 192.168.72.130/192.168.72.130:2181. Will not attempt to authenticate using SASL (unknown error)

2021-06-12 16:34:26,635 INFO [main-SendThread(192.168.72.130:2181)] zookeeper.ClientCnxn: Socket connection established to 192.168.72.130/192.168.72.130:2181, initiating session

2021-06-12 16:34:26,673 INFO [main-SendThread(192.168.72.130:2181)] zookeeper.ClientCnxn: Session establishment complete on server 192.168.72.130/192.168.72.130:2181, sessionid = 0x179ff5ba35d0000, negotiated timeout = 90000

2021-06-12 16:34:26,850 INFO [main] util.log: Logging initialized @5333ms

2021-06-12 16:34:26,989 INFO [main] http.HttpRequestLog: Http request log for http.requests.master is not defined

2021-06-12 16:34:27,010 INFO [main] http.HttpServer: Added global filter 'safety' (class=org.apache.hadoop.hbase.http.HttpServer$QuotingInputFilter)

2021-06-12 16:34:27,011 INFO [main] http.HttpServer: Added global filter 'clickjackingprevention' (class=org.apache.hadoop.hbase.http.ClickjackingPreventionFilter)

2021-06-12 16:34:27,016 INFO [main] http.HttpServer: Added filter static_user_filter (class=org.apache.hadoop.hbase.http.lib.StaticUserWebFilter$StaticUserFilter) to context master

2021-06-12 16:34:27,017 INFO [main] http.HttpServer: Added filter static_user_filter (class=org.apache.hadoop.hbase.http.lib.StaticUserWebFilter$StaticUserFilter) to context static

2021-06-12 16:34:27,017 INFO [main] http.HttpServer: Added filter static_user_filter (class=org.apache.hadoop.hbase.http.lib.StaticUserWebFilter$StaticUserFilter) to context logs

2021-06-12 16:34:27,054 INFO [main] http.HttpServer: Jetty bound to port 16010

2021-06-12 16:34:27,055 INFO [main] server.Server: jetty-9.3.19.v20170502

2021-06-12 16:34:27,107 INFO [main] handler.ContextHandler: Started o.e.j.s.ServletContextHandler@11a8042c{/logs,file:///usr/local/hbase/logs/,AVAILABLE}

2021-06-12 16:34:27,107 INFO [main] handler.ContextHandler: Started o.e.j.s.ServletContextHandler@69391e08{/static,file:///usr/local/hbase/hbase-webapps/static/,AVAILABLE}

2021-06-12 16:34:27,299 INFO [main] handler.ContextHandler: Started o.e.j.w.WebAppContext@5e519ad3{/,file:///usr/local/hbase/hbase-webapps/master/,AVAILABLE}{file:/usr/local/hbase/hbase-webapps/master}

2021-06-12 16:34:27,307 INFO [main] server.AbstractConnector: Started ServerConnector@4f49dd2a{HTTP/1.1,[http/1.1]}{0.0.0.0:16010}

2021-06-12 16:34:27,308 INFO [main] server.Server: Started @5791ms

2021-06-12 16:34:27,311 INFO [main] master.HMaster: hbase.rootdir=hdfs://192.168.72.130:9000/hbase, hbase.cluster.distributed=true

2021-06-12 16:34:27,358 INFO [master/ubuntu:16000:becomeActiveMaster] master.HMaster: Adding backup master ZNode /hbase/backup-masters/ubuntu,16000,1623486862761

2021-06-12 16:34:27,496 INFO [master/ubuntu:16000:becomeActiveMaster] master.ActiveMasterManager: Deleting ZNode for /hbase/backup-masters/ubuntu,16000,1623486862761 from backup master directory

2021-06-12 16:34:27,503 INFO [master/ubuntu:16000:becomeActiveMaster] master.ActiveMasterManager: Registered as active master=ubuntu,16000,1623486862761

2021-06-12 16:34:27,514 INFO [master/ubuntu:16000:becomeActiveMaster] regionserver.ChunkCreator: Allocating data MemStoreChunkPool with chunk size 2 MB, max count 161, initial count 0

2021-06-12 16:34:27,523 INFO [master/ubuntu:16000:becomeActiveMaster] regionserver.ChunkCreator: Allocating index MemStoreChunkPool with chunk size 204.80 KB, max count 179, initial count 0

2021-06-12 16:34:27,595 ERROR [master/ubuntu:16000:becomeActiveMaster] master.HMaster: Failed to become active master

java.net.ConnectException: Call From ubuntu/192.168.72.130 to ubuntu:9000 failed on connection exception: java.net.ConnectException: Connection refused; For more details see: http://wiki.apache.org/hadoop/ConnectionRefused

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hadoop.net.NetUtils.wrapWithMessage(NetUtils.java:792)

at org.apache.hadoop.net.NetUtils.wrapException(NetUtils.java:732)

at org.apache.hadoop.ipc.Client.call(Client.java:1480)

at org.apache.hadoop.ipc.Client.call(Client.java:1413)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:229)

at com.sun.proxy.$Proxy18.setSafeMode(Unknown Source)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.setSafeMode(ClientNamenodeProtocolTranslatorPB.java:671)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:191)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:102)

at com.sun.proxy.$Proxy19.setSafeMode(Unknown Source)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.hbase.fs.HFileSystem$1.invoke(HFileSystem.java:372)

at com.sun.proxy.$Proxy20.setSafeMode(Unknown Source)

at org.apache.hadoop.hdfs.DFSClient.setSafeMode(DFSClient.java:2610)

at org.apache.hadoop.hdfs.DistributedFileSystem.setSafeMode(DistributedFileSystem.java:1223)

at org.apache.hadoop.hdfs.DistributedFileSystem.setSafeMode(DistributedFileSystem.java:1207)

at org.apache.hadoop.hbase.util.FSUtils.isInSafeMode(FSUtils.java:293)

at org.apache.hadoop.hbase.util.FSUtils.waitOnSafeMode(FSUtils.java:699)

at org.apache.hadoop.hbase.master.MasterFileSystem.checkRootDir(MasterFileSystem.java:250)

at org.apache.hadoop.hbase.master.MasterFileSystem.createInitialFileSystemLayout(MasterFileSystem.java:151)

at org.apache.hadoop.hbase.master.MasterFileSystem.<init>(MasterFileSystem.java:122)

at org.apache.hadoop.hbase.master.HMaster.finishActiveMasterInitialization(HMaster.java:864)

at org.apache.hadoop.hbase.master.HMaster.startActiveMasterManager(HMaster.java:2254)

at org.apache.hadoop.hbase.master.HMaster.lambda$run$0(HMaster.java:583)

at java.lang.Thread.run(Thread.java:748)

Caused by: java.net.ConnectException: Connection refused

at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)

at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:714)

at org.apache.hadoop.net.SocketIOWithTimeout.connect(SocketIOWithTimeout.java:206)

at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:531)

at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:495)

at org.apache.hadoop.ipc.Client$Connection.setupConnection(Client.java:615)

at org.apache.hadoop.ipc.Client$Connection.setupIOstreams(Client.java:713)

at org.apache.hadoop.ipc.Client$Connection.access$2900(Client.java:376)

at org.apache.hadoop.ipc.Client.getConnection(Client.java:1529)

at org.apache.hadoop.ipc.Client.call(Client.java:1452)

... 29 more

2021-06-12 16:34:27,596 ERROR [master/ubuntu:16000:becomeActiveMaster] master.HMaster: ***** ABORTING master ubuntu,16000,1623486862761: Unhandled exception. Starting shutdown. *****

----------------------------------------------------------------------
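A log like the one above is long, and the interesting part is the ERROR entries and the exception right after them. The following is a minimal sketch for pulling those out, assuming the log layout shown above (timestamp, level, thread in brackets, message). The function name `find_errors` and the regular expression are illustrative, not part of HBase.

```python
import re

# Matches HBase log lines such as:
# 2021-06-12 16:34:27,595 ERROR [thread] master.HMaster: message
LOG_LINE = re.compile(
    r"^(?P<ts>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2},\d{3}) "
    r"(?P<level>[A-Z]+)\s+\[(?P<thread>[^\]]*)\] (?P<msg>.*)$"
)


def find_errors(log_text):
    """Return (timestamp, message, exception) tuples for each ERROR entry.

    `exception` is the first non-empty line after the ERROR line if that
    line is neither a log line nor a stack frame; otherwise it is None.
    """
    results = []
    # Drop blank lines so the line after an ERROR entry is easy to inspect.
    lines = [ln.strip() for ln in log_text.splitlines() if ln.strip()]
    for i, line in enumerate(lines):
        m = LOG_LINE.match(line)
        if not m or m.group("level") != "ERROR":
            continue
        exception = None
        if i + 1 < len(lines):
            nxt = lines[i + 1]
            if not LOG_LINE.match(nxt) and not nxt.startswith("at "):
                exception = nxt
        results.append((m.group("ts"), m.group("msg"), exception))
    return results
```

Reading the log file into a string and passing it to `find_errors` would list the "Failed to become active master" entry together with its `java.net.ConnectException` line, followed by the "ABORTING master" entry.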

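The failure is a "java.net.ConnectException: Connection refused": HMaster cannot reach HDFS at ubuntu:9000, so it aborts right after registering as the active master. With no master running, the /hbase/master znode is gone, which is why hbase shell reports NoNode.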
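"Connection refused" means nothing accepted the TCP connection on that address and port. A quick way to see which addresses the NameNode port answers on is a plain socket test. This is a minimal sketch; the helper name `can_connect` is illustrative, and the host and port you pass in come from your own configuration.

```python
import socket


def can_connect(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unresolvable hosts.
        return False
```

On the machine from this post, comparing `can_connect("127.0.0.1", 9000)` with `can_connect("192.168.72.130", 9000)` would show whether port 9000 is reachable only through the loopback address.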

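Analysis and fix:

1. At first I suspected a permissions problem with the my account. I changed the relevant permissions repeatedly, with no success.

2. I edited /etc/hosts repeatedly, also with no success.

3. After searching on Baidu I again suspected /etc/hosts, but the file matched what the online guides describe and nothing in it was wrong.

4. Checking step by step, I found the following in Hadoop's core-site.xml: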

<property>

<name>fs.defaultFS</name>

<value>hdfs://localhost:9000</value>

</property>

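At first glance nothing looks wrong here either, but changing it to the following solved the problem. The likely reason is that with localhost the NameNode listens only on the loopback address, while HBase (hbase.rootdir=hdfs://192.168.72.130:9000/hbase in the log above) connects to port 9000 on 192.168.72.130: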

<property>

<name>fs.defaultFS</name>

<value>hdfs://192.168.72.130:9000</value>

</property>

5. After the change, start Hadoop, then run start-hbase.sh, then hbase shell.

HBase now connects to HDFS normally and basic commands work.

Problem solved at last!
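The check in step 4 can also be done programmatically. Below is a minimal sketch that reads a property from a Hadoop-style configuration file and reports whether its URI points at a loopback host. The helper names `read_property` and `is_loopback` are illustrative. No DNS lookup is performed, so a hostname that /etc/hosts maps to 127.0.0.1 would not be flagged.

```python
import ipaddress
import xml.etree.ElementTree as ET
from urllib.parse import urlsplit


def read_property(xml_text, name):
    """Return the <value> of the Hadoop-style <property> called name, or None."""
    root = ET.fromstring(xml_text)
    for prop in root.iter("property"):
        if prop.findtext("name", default="").strip() == name:
            value = prop.findtext("value")
            return value.strip() if value is not None else None
    return None


def is_loopback(uri):
    """True if the URI's host is 'localhost' or a loopback IP literal."""
    host = urlsplit(uri).hostname
    if host is None:
        return False
    if host.lower() == "localhost":
        return True
    try:
        return ipaddress.ip_address(host).is_loopback
    except ValueError:
        # A hostname other than localhost; it is not resolved here.
        return False
```

Passing the contents of core-site.xml to `read_property(..., "fs.defaultFS")` and the result to `is_loopback` would flag the original hdfs://localhost:9000 value and accept hdfs://192.168.72.130:9000.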

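-----Update 2022-08-16

Reference: https://blog.csdn.net/weixin_42965737/article/details/116091854 ("hbase中启动master后,自动关闭的原因详解", on why the HBase master shuts down automatically after starting; 墨者大数据's blog on CSDN)

The author really knows their stuff.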

The following setting in hbase-site.xml:

<property>

<name>hbase.rootdir</name>

<value>hdfs://hadoop101:9000/HBase</value>

</property>

must match this setting in Hadoop's core-site.xml:

<property>

<name>fs.defaultFS</name>

<value>hdfs://hadoop101:9000</value>

</property>

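That is, the scheme, host and port of hbase.rootdir must be exactly the same as in fs.defaultFS. Only the path after the port (here /HBase) is specific to HBase.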
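The rule above is easy to verify by comparing the two URIs. This is a minimal sketch with an illustrative helper name, `same_namenode`. It compares the strings as written and does not resolve names, so localhost and 127.0.0.1 count as different hosts.

```python
from urllib.parse import urlsplit


def same_namenode(default_fs, rootdir):
    """True if both URIs have the same scheme, host and port."""
    a, b = urlsplit(default_fs), urlsplit(rootdir)
    return (a.scheme, a.hostname, a.port) == (b.scheme, b.hostname, b.port)
```

With the values shown above, `same_namenode("hdfs://hadoop101:9000", "hdfs://hadoop101:9000/HBase")` holds, while pairing hdfs://localhost:9000 with hdfs://192.168.72.130:9000/hbase, as in the original setup, does not.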
