Fixing the error: train.py: error: unrecognized arguments: --local-rank=1 ERROR:torch.distributed.elastic.multipr


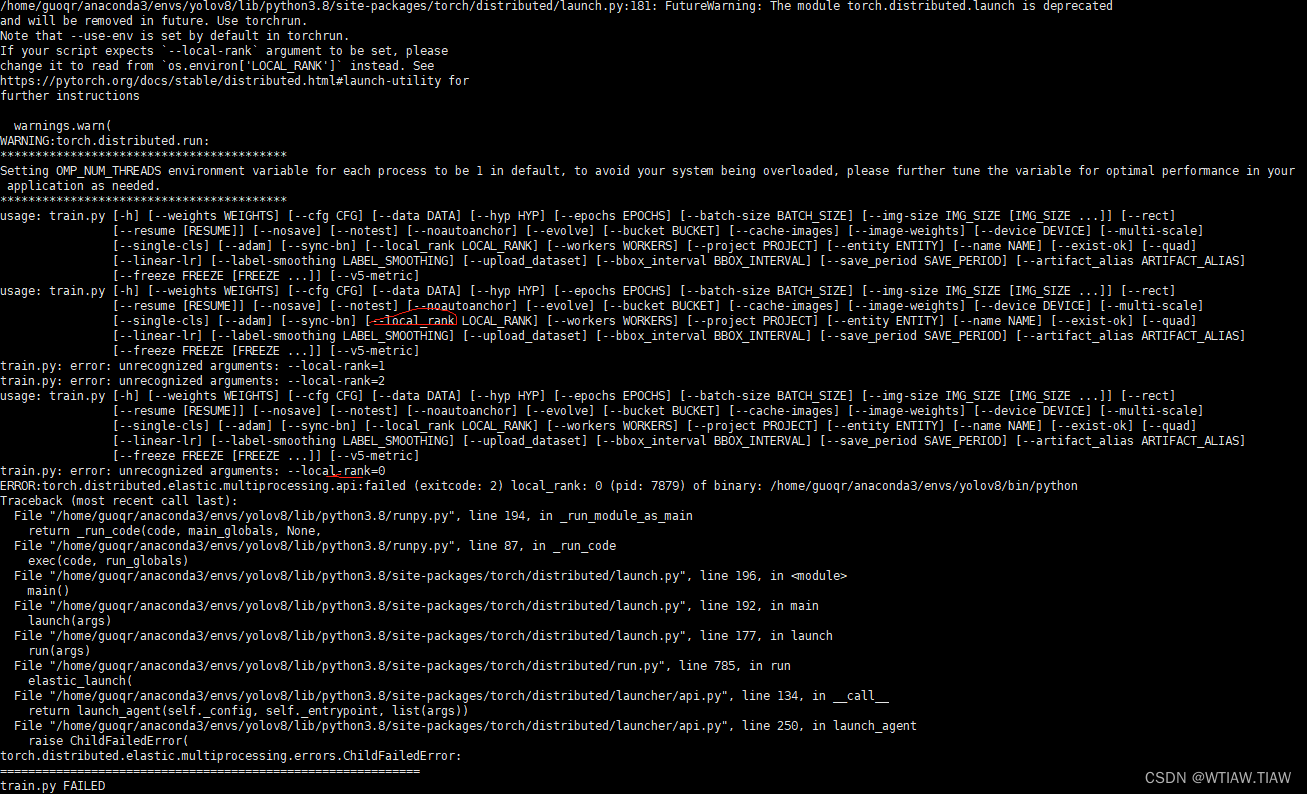

The error output is as follows:

warnings.warn(

WARNING:torch.distributed.run:

*****************************************

Setting OMP_NUM_THREADS environment variable for each process to be 1 in default, to avoid your system being overloaded, please further tune the variable for optimal performance in your application as needed.

*****************************************

usage: train.py [-h] [--weights WEIGHTS] [--cfg CFG] [--data DATA] [--hyp HYP] [--epochs EPOCHS] [--batch-size BATCH_SIZE]

[--img-size IMG_SIZE [IMG_SIZE ...]] [--rect] [--resume [RESUME]] [--nosave] [--notest] [--noautoanchor] [--evolve] [--bucket BUCKET]

[--cache-images] [--image-weights] [--device DEVICE] [--multi-scale] [--single-cls] [--adam] [--sync-bn] [--local_rank LOCAL_RANK]

[--workers WORKERS] [--project PROJECT] [--entity ENTITY] [--name NAME] [--exist-ok] [--quad] [--linear-lr]

[--label-smoothing LABEL_SMOOTHING] [--upload_dataset] [--bbox_interval BBOX_INTERVAL] [--save_period SAVE_PERIOD]

[--artifact_alias ARTIFACT_ALIAS] [--freeze FREEZE [FREEZE ...]] [--v5-metric]

usage: train.py [-h] [--weights WEIGHTS] [--cfg CFG] [--data DATA] [--hyp HYP] [--epochs EPOCHS] [--batch-size BATCH_SIZE]

[--img-size IMG_SIZE [IMG_SIZE ...]] [--rect] [--resume [RESUME]] [--nosave] [--notest] [--noautoanchor] [--evolve] [--bucket BUCKET]

[--cache-images] [--image-weights] [--device DEVICE] [--multi-scale] [--single-cls] [--adam] [--sync-bn] [--local_rank LOCAL_RANK]

[--workers WORKERS] [--project PROJECT] [--entity ENTITY] [--name NAME] [--exist-ok] [--quad] [--linear-lr]

[--label-smoothing LABEL_SMOOTHING] [--upload_dataset] [--bbox_interval BBOX_INTERVAL] [--save_period SAVE_PERIOD]

[--artifact_alias ARTIFACT_ALIAS] [--freeze FREEZE [FREEZE ...]] [--v5-metric]

train.py: error: unrecognized arguments: --local-rank=2

train.py: error: unrecognized arguments: --local-rank=0

usage: train.py [-h] [--weights WEIGHTS] [--cfg CFG] [--data DATA] [--hyp HYP] [--epochs EPOCHS] [--batch-size BATCH_SIZE]

[--img-size IMG_SIZE [IMG_SIZE ...]] [--rect] [--resume [RESUME]] [--nosave] [--notest] [--noautoanchor] [--evolve] [--bucket BUCKET]

[--cache-images] [--image-weights] [--device DEVICE] [--multi-scale] [--single-cls] [--adam] [--sync-bn] [--local_rank LOCAL_RANK]

[--workers WORKERS] [--project PROJECT] [--entity ENTITY] [--name NAME] [--exist-ok] [--quad] [--linear-lr]

[--label-smoothing LABEL_SMOOTHING] [--upload_dataset] [--bbox_interval BBOX_INTERVAL] [--save_period SAVE_PERIOD]

[--artifact_alias ARTIFACT_ALIAS] [--freeze FREEZE [FREEZE ...]] [--v5-metric]

train.py: error: unrecognized arguments: --local-rank=1

ERROR:torch.distributed.elastic.multiprocessing.api:failed (exitcode: 2) local_rank: 0 (pid: 27361) of binary: /home/guoqr/anaconda3/envs/yolov8/bin/python

Traceback (most recent call last):

File "/home/guoqr/anaconda3/envs/yolov8/lib/python3.8/runpy.py", line 194, in _run_module_as_main

return _run_code(code, main_globals, None,

File "/home/guoqr/anaconda3/envs/yolov8/lib/python3.8/runpy.py", line 87, in _run_code

exec(code, run_globals)

File "/home/guoqr/anaconda3/envs/yolov8/lib/python3.8/site-packages/torch/distributed/launch.py", line 196, in <module>

main()

File "/home/guoqr/anaconda3/envs/yolov8/lib/python3.8/site-packages/torch/distributed/launch.py", line 192, in main

launch(args)

File "/home/guoqr/anaconda3/envs/yolov8/lib/python3.8/site-packages/torch/distributed/launch.py", line 177, in launch

run(args)

File "/home/guoqr/anaconda3/envs/yolov8/lib/python3.8/site-packages/torch/distributed/run.py", line 785, in run

elastic_launch(

File "/home/guoqr/anaconda3/envs/yolov8/lib/python3.8/site-packages/torch/distributed/launcher/api.py", line 134, in __call__

return launch_agent(self._config, self._entrypoint, list(args))

File "/home/guoqr/anaconda3/envs/yolov8/lib/python3.8/site-packages/torch/distributed/launcher/api.py", line 250, in launch_agent

raise ChildFailedError(

torch.distributed.elastic.multiprocessing.errors.ChildFailedError:

============================================================

train.py FAILED

------------------------------------------------------------

Failures:

[1]:

time : 2023-04-20_20:49:25

host : wsn-Server1F

rank : 1 (local_rank: 1)

exitcode : 2 (pid: 27362)

error_file: <N/A>

traceback : To enable traceback see: https://pytorch.org/docs/stable/elastic/errors.html

[2]:

time : 2023-04-20_20:49:25

host : wsn-Server1F

rank : 2 (local_rank: 2)

exitcode : 2 (pid: 27364)

error_file: <N/A>

traceback : To enable traceback see: https://pytorch.org/docs/stable/elastic/errors.html

------------------------------------------------------------

Root Cause (first observed failure):

[0]:

time : 2023-04-20_20:49:25

host : wsn-Server1F

rank : 0 (local_rank: 0)

exitcode : 2 (pid: 27361)

error_file: <N/A>

traceback : To enable traceback see: https://pytorch.org/docs/stable/elastic/errors.html

============================================================

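As the output above shows, the error is caused by a mismatch between --local_rank and --local-rank. The usage text lists [--local_rank LOCAL_RANK], but each worker process was started with --local-rank=N. Tracing it back, the cause turns out to be that launch.py in torch 2.0 uses the hyphenated --local-rank throughout, while this YOLOv7 source code declares the argument as --local_rank.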
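The mismatch can be reproduced without torch at all. The following is a minimal sketch, assuming a parser that declares only the underscore spelling, as the usage text above suggests. The parser here is a stand-in for illustration and not the actual train.py code. It uses parse_known_args so that the unmatched flag is collected rather than ending the process with exit code 2.

```python
import argparse

# Stand-in parser (an assumption for illustration): it declares only the
# underscore spelling, matching the "[--local_rank LOCAL_RANK]" usage entry.
parser = argparse.ArgumentParser(prog="train.py")
parser.add_argument("--local_rank", type=int, default=-1)

# Simulate what the launcher passes to each worker.
# parse_known_args returns unmatched arguments instead of exiting.
opt, unknown = parser.parse_known_args(["--local-rank=1"])

print(opt.local_rank)  # the default is kept, because the flag was not matched
print(unknown)         # the hyphenated flag ends up among the unrecognized arguments
```

With parse_args in place of parse_known_args, the same input would print the usage text and the "unrecognized arguments" error seen in the log.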

Solution

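Method 1: in train.py, change the argument flag --local_rank to --local-rank. Only the option string passed to add_argument needs to change. argparse converts hyphens in a long option name to underscores when it derives the attribute name, so the parsed value is still read as local_rank (for example opt.local_rank), and those references in the code should be left as they are.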
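If the same script has to run under both older and newer launchers, one option is to register both spellings on a single argument instead of renaming it. The following is a minimal sketch under that assumption. The parser and the default value of -1 are illustrative and are not copied from the YOLOv7 source.

```python
import argparse

# Illustrative parser, not the actual train.py code.
parser = argparse.ArgumentParser(prog="train.py")

# One argument, two accepted option strings. dest is set explicitly so the
# value is always stored under the attribute name local_rank.
parser.add_argument(
    "--local_rank", "--local-rank",
    dest="local_rank",
    type=int,
    default=-1,
)

# Underscore spelling, as declared in the original usage text.
old_style = parser.parse_args(["--local_rank=1"])
# Hyphenated spelling, as passed by the launcher in the log above.
new_style = parser.parse_args(["--local-rank=2"])

print(old_style.local_rank, new_style.local_rank)
```

Both calls populate the same attribute, so the rest of the script does not need to know which spelling the launcher used.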

Method 2: switch to a different torch version. After I changed torch to 1.13, the error went away.
